basic k8s

kubeadm init

init options can be:

- --apiserver-bind-port int32: the port the API server binds to; the default is 6443
- --config string: path to a kubeadm configuration file used to create the master node
- --node-name string: the name to assign to the node
- --pod-network-cidr string: the IP address range for all Pods
- --service-cidr string: the IP address range for Services; the default is 10.96.0.0/12
- --service-dns-domain string: the DNS domain for Services; the default is cluster.local
- --apiserver-advertise-address string: the IP address on which the API server advertises that it is listening
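
As a rough illustration of how these options combine, the sketch below assembles a kubeadm init command line in Python. The address, node name, and pod CIDR are placeholder values, not taken from a real cluster; 10.244.0.0/16 is assumed only because it is flannel's usual default. The --config option is left out, since kubeadm generally expects settings to come either from a config file or from flags.

```python
import shlex


def kubeadm_init_command(options):
    """Build a `kubeadm init` command line from a dict of flag name -> value."""
    args = ["kubeadm", "init"]
    for flag, value in options.items():
        args.append(f"--{flag}={value}")
    # shlex.join quotes any argument that would need it in a shell.
    return shlex.join(args)


# Placeholder values for illustration only.
command = kubeadm_init_command({
    "apiserver-advertise-address": "192.168.0.1",
    "apiserver-bind-port": 6443,
    "node-name": "master",
    "pod-network-cidr": "10.244.0.0/16",  # assumption: flannel's usual default
    "service-cidr": "10.96.0.0/12",
    "service-dns-domain": "cluster.local",
})
print(command)
```

The printed line can be reviewed and then run by hand on the master node.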

nodes components

| IP | hostname | components |
|---|---|---|
| 192.168.0.1 | master | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, docker, flannel, dashboard |
| 192.168.0.2 | worker | kubelet, docker, flannel |

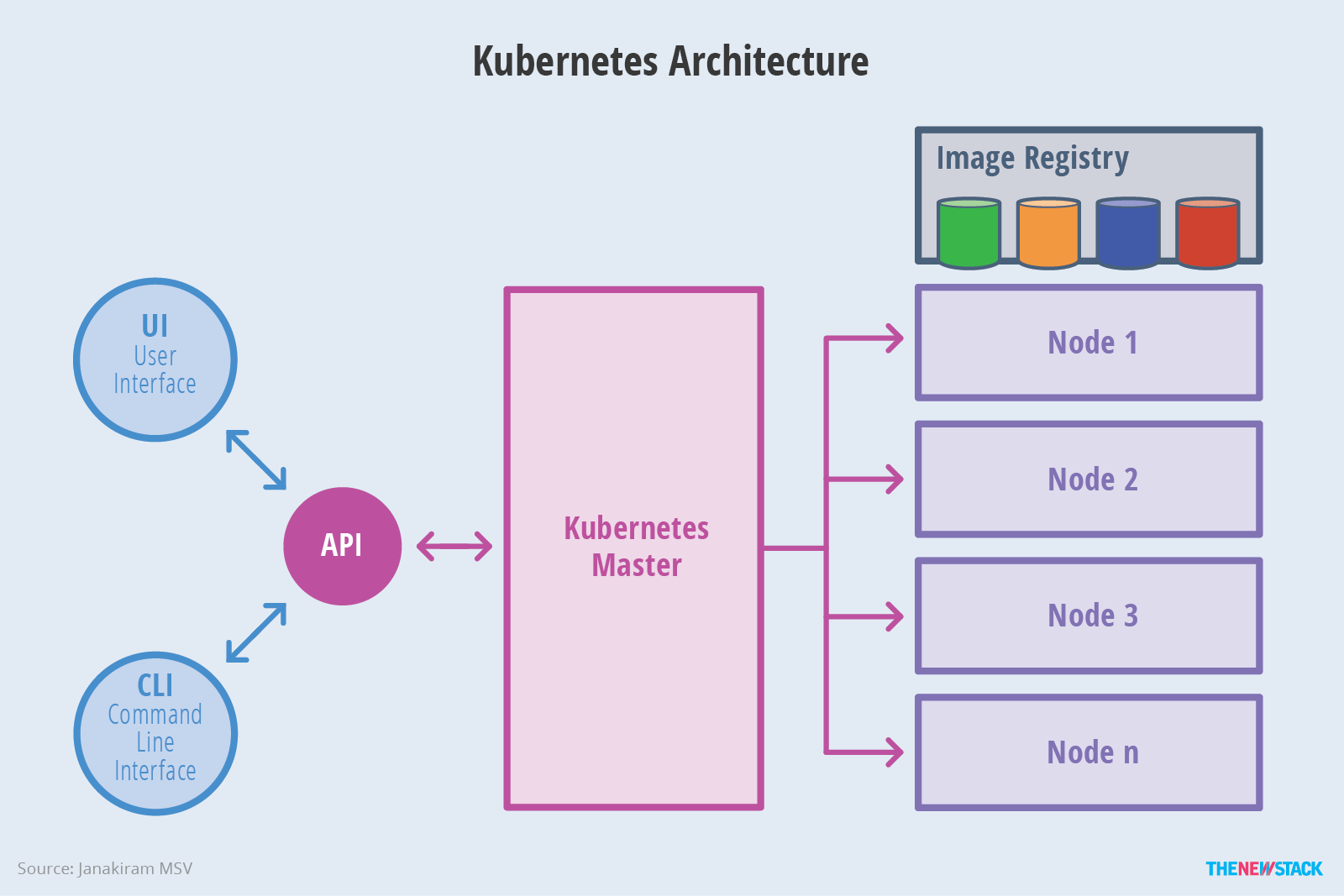

ApiServer

When the kubelet first starts, it sends a bootstrapping request to kube-apiserver, which then verifies whether the supplied token matches.

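The token itself has a fixed shape, which can be checked before it is ever sent. The sketch below validates only that shape (a 6-character ID, a dot, and a 16-character secret, all lowercase letters or digits); whether the token is actually accepted is decided by the API server. The helper name is hypothetical.

```python
import re

# Bootstrap tokens look like "<token-id>.<token-secret>":
# 6 characters, a dot, then 16 characters, all lowercase letters or digits.
BOOTSTRAP_TOKEN = re.compile(r"[a-z0-9]{6}\.[a-z0-9]{16}")


def is_well_formed_token(token):
    """Return True if the string has the bootstrap token format."""
    return BOOTSTRAP_TOKEN.fullmatch(token) is not None


print(is_well_formed_token("abcdef.0123456789abcdef"))  # True
print(is_well_formed_token("not-a-token"))              # False
```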

cluster IP

The cluster IP is the service's IP. It is internal to the cluster, and clients usually reach the service by its name rather than by this IP.

By default, cluster IPs are allocated from the service CIDR 10.96.0.0/12 (the --service-cidr option of kubeadm init).
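To get a feel for what the default 10.96.0.0/12 range covers, here is a small sketch using Python's standard ipaddress module. The two addresses tested at the end are arbitrary examples.

```python
import ipaddress

# The default service CIDR used by kubeadm.
service_cidr = ipaddress.ip_network("10.96.0.0/12")

print(service_cidr.num_addresses)          # 1048576
print(service_cidr[0], service_cidr[-1])   # 10.96.0.0 10.111.255.255

# A cluster IP falls inside the range; a node IP such as 192.168.0.1 does not.
print(ipaddress.ip_address("10.96.0.1") in service_cidr)    # True
print(ipaddress.ip_address("192.168.0.1") in service_cidr)  # False
```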

k8s in practice

BlueKing is a k8s solution from Tencent. Here is a quickstart:

create a task

add an agent for the task

run the task & check the sys log

create task pipeline (CI/CD)

create a new service in k8s

- create a namespace for the specific business

- create services, pulling images from a private registry hub

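The two steps above can be sketched as manifests. Since k8s accepts JSON as well as YAML, the Python snippet below builds a Namespace and a Deployment as dictionaries and prints them as one JSON list. The namespace, image, and secret names are all hypothetical, and the pull secret is assumed to already exist in the same namespace.

```python
import json

NAMESPACE = "ads-business"                      # hypothetical namespace name
IMAGE = "registry.example.com/ads/runner:1.0"   # hypothetical private image
PULL_SECRET = "regcred"                         # hypothetical registry secret

namespace = {
    "apiVersion": "v1",
    "kind": "Namespace",
    "metadata": {"name": NAMESPACE},
}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "ads-runner", "namespace": NAMESPACE},
    "spec": {
        "replicas": 1,
        "selector": {"matchLabels": {"app": "ads-runner"}},
        "template": {
            "metadata": {"labels": {"app": "ads-runner"}},
            "spec": {
                # Credentials used to pull from the private registry.
                "imagePullSecrets": [{"name": PULL_SECRET}],
                "containers": [{"name": "ads-runner", "image": IMAGE}],
            },
        },
    },
}

# A v1 List lets both objects be applied from a single document.
manifest = {"apiVersion": "v1", "kind": "List", "items": [namespace, deployment]}
print(json.dumps(manifest, indent=2))
```

The output could be saved to a file and applied with kubectl apply -f.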

how to access a k8s pod service externally?

A pod has its own IP and a limited lifecycle. Once its node shuts down, the controller manager can move the pod to another node. When multiple pods provide the same service to front-end users, those users do not care which pod is actually serving them. This is where the concept of a service comes in:

service is an abstraction which defines a logical set of Pods and a policy by which to access them

A service can be defined in YAML or JSON, and its target pods are selected with a LabelSelector. There are a few ways to expose a service:

- ClusterIP, the default, which only works inside the k8s cluster
- NodePort, which uses NAT to provide external access through a dedicated port on each node. The port should be within the configured NodePort range (8400~9000 here; the Kubernetes default is 30000-32767). This way, no matter which node the pod is actually running on, accessing any node's IP at that port reaches the service.
- LoadBalancer, which exposes the service through an external load balancer

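A NodePort service could look roughly like the sketch below, again built as a Python dictionary and printed as JSON. The service name, label, and port numbers are hypothetical; 30080 is chosen only because it fits the Kubernetes default NodePort range (30000-32767), so pick a value inside whatever range your cluster is configured with.

```python
import json


def node_port_service(name, selector, port, target_port, node_port):
    """Build a NodePort Service manifest that targets pods by label."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": selector,           # the label selector for target pods
            "ports": [{
                "port": port,               # port on the cluster IP
                "targetPort": target_port,  # port the container listens on
                "nodePort": node_port,      # port opened on every node
            }],
        },
    }


# Hypothetical values for illustration.
service = node_port_service("web", {"app": "web"}, 80, 5000, 30080)
print(json.dumps(service, indent=2))
```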

use persistent volume

access external sql

use volume

A volume is for persistence. A k8s volume is similar to a Docker volume and works as a directory: when a volume is mounted into a pod, all containers in that pod can access it.

- emptyDir

- hostPath

- external storage service (AWS, Azure): k8s can directly use cloud storage as a volume, or a distributed storage system (Ceph)
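As a minimal sketch of the first two volume types, the pod below declares an emptyDir volume mounted by both containers and a hostPath volume mounted read-only by one of them. The pod name, container names, images, and paths are hypothetical.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "volume-demo"},
    "spec": {
        "volumes": [
            {"name": "scratch", "emptyDir": {}},                      # lives as long as the pod
            {"name": "host-logs", "hostPath": {"path": "/var/log"}},  # directory on the node
        ],
        "containers": [
            {
                "name": "writer",
                "image": "busybox",
                "command": ["sh", "-c",
                            "while true; do date >> /data/out.log; sleep 5; done"],
                "volumeMounts": [{"name": "scratch", "mountPath": "/data"}],
            },
            {
                "name": "reader",
                "image": "busybox",
                "command": ["sh", "-c", "touch /data/out.log; tail -f /data/out.log"],
                "volumeMounts": [
                    {"name": "scratch", "mountPath": "/data"},
                    {"name": "host-logs", "mountPath": "/host-logs", "readOnly": True},
                ],
            },
        ],
    },
}

# Sanity check: every mount must refer to a volume declared in the pod spec.
declared = {volume["name"] for volume in pod["spec"]["volumes"]}
mounted = {mount["name"]
           for container in pod["spec"]["containers"]
           for mount in container["volumeMounts"]}
print(mounted <= declared)  # True
print(json.dumps(pod, indent=2))
```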

containers communication in same pod

First, containers in the same pod share the same network namespace and the same IPC namespace, and they can share volumes.

- shared volumes in a pod

One container writes logs or other files to the shared directory, and the other container reads from it.


- inter-process communication(IPC)

As they share the same IPC namespace, they can communicate with each other using standard IPC mechanisms, e.g. POSIX shared memory or System V semaphores.
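
To make the shared-memory case concrete, here is a minimal sketch using Python's multiprocessing.shared_memory module. For brevity both the "writer" and the "reader" handles live in one process; in a pod they would be separate processes in different containers, and the segment name would have to be passed between them by some agreed convention.

```python
from multiprocessing import shared_memory

# "Writer" side: create a named shared memory segment and put some bytes in it.
writer = shared_memory.SharedMemory(create=True, size=16)
writer.buf[:5] = b"hello"

# "Reader" side: attach to the same segment by name and read the bytes back.
reader = shared_memory.SharedMemory(name=writer.name)
message = bytes(reader.buf[:5])
print(message)  # b'hello'

# Release both handles, then remove the segment itself.
reader.close()
writer.close()
writer.unlink()
```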


- inter-container network communication

Containers in a pod can reach each other via localhost, as they share the same network namespace. For external clients, the observable host name is the pod's name. Because all containers in the pod share the same IP and port space, each container needs a different port for incoming connections.


For example, an external HTTP request arriving on port 80 is forwarded to port 5000 on localhost inside the pod, and port 5000 is not visible externally.

how two services communicate

- ads_runner


If there is a need to autoscale the service, check "k8s autoscale based on the size of queue".

- redis-job-queue


Inside the k8s cluster, ads_runner can reach Redis at the address redis-server:6379.
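
The short name works because cluster DNS resolves a service name within its own namespace. The sketch below spells out the fully qualified form; the helper names are hypothetical, and the "default" namespace and the cluster.local domain are assumptions (the latter being the kubeadm default for --service-dns-domain).

```python
def service_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Return the fully qualified in-cluster DNS name of a service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def service_address(service, port, **kwargs):
    """Return "host:port" for a service, using its fully qualified name."""
    return f"{service_dns_name(service, **kwargs)}:{port}"


print(service_address("redis-server", 6379))
# redis-server.default.svc.cluster.local:6379
```

A client in another namespace would need at least the service.namespace form instead of the bare service name.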

Redis replication is asynchronous and supports multiple Redis instances running simultaneously, so when the Redis service needs to scale, it is fine to start a few Redis replicas as well.

redis work queue

check redis task queue:

- start a storage service(redis) to hold the work queue

- create a queue, and fill it with messages, each message represents one task to be done

- start a job that works on tasks from the queue
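
The three steps above can be sketched without a running Redis server. The class below is an in-memory stand-in, not the Redis client: its rpush and lpop methods only mirror the Redis list commands of the same names, and the task names and the handler are made up for illustration.

```python
from collections import deque


class InMemoryQueue:
    """Stand-in for a Redis list; rpush/lpop mirror the Redis commands."""

    def __init__(self):
        self._items = deque()

    def rpush(self, *values):
        """Append values to the tail and return the new queue length."""
        self._items.extend(values)
        return len(self._items)

    def lpop(self):
        """Remove and return the head item, or None if the queue is empty."""
        return self._items.popleft() if self._items else None


def run_worker(queue, handle):
    """Process tasks until the queue is empty, as a job worker would."""
    done = []
    while (task := queue.lpop()) is not None:
        done.append(handle(task))
    return done


queue = InMemoryQueue()                   # step 1: storage for the work queue
queue.rpush("apple", "banana", "cherry")  # step 2: one message per task
results = run_worker(queue, str.upper)    # step 3: a job works through the tasks
print(results)  # ['APPLE', 'BANANA', 'CHERRY']
```

With a real Redis service, several worker pods could pop from the same list in parallel, each taking a different task.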

refer

k8s: volumes and persistent storage

multi-container pods and container communication in k8s

k8s doc: communicate between containers in the same pod using a shared volume