mobility data center in ADAS/ADS

keywords: AUTOSAR, data factory, AI training, simulation, V&V, DevOps, IoT, ADAS applications

Two or three years ago, the top teams focused on AI algorithms for perception and planning, riding the boom in DNNs. A lot of investment went there, and the optimists thought that once the best trained model was found, self-driving would be ready to go.

Then came the well-known 2018 talk from the Waymo team on “long tail problems in AV”. People realized that to solve these problems they need as much data as possible, as cheaply as possible, which created a new business: the data factory and the data pipeline.

Investors realized that the most cutting-edge AI model is only a small piece of the job. There must also be a data factory, and it comes from MaaS service providers or traditional OEMs.

Since data collectors are not common in traditional vehicles, OEMs first have to build a new in-vehicle network architecture that makes ADAS/ADS data collection possible. In the end, the game comes back to the OEMs.

At this point, IoT providers see their slice of the cake in the AD market. OEMs may have some understanding of the in-vehicle gateway and T-Box, but edge computing and the cloud data pipeline are mostly owned by IoT providers, e.g. Huawei, and by public data service providers, e.g. China Mobile. The nationwide rollout of 5G infrastructure also accelerates their share.

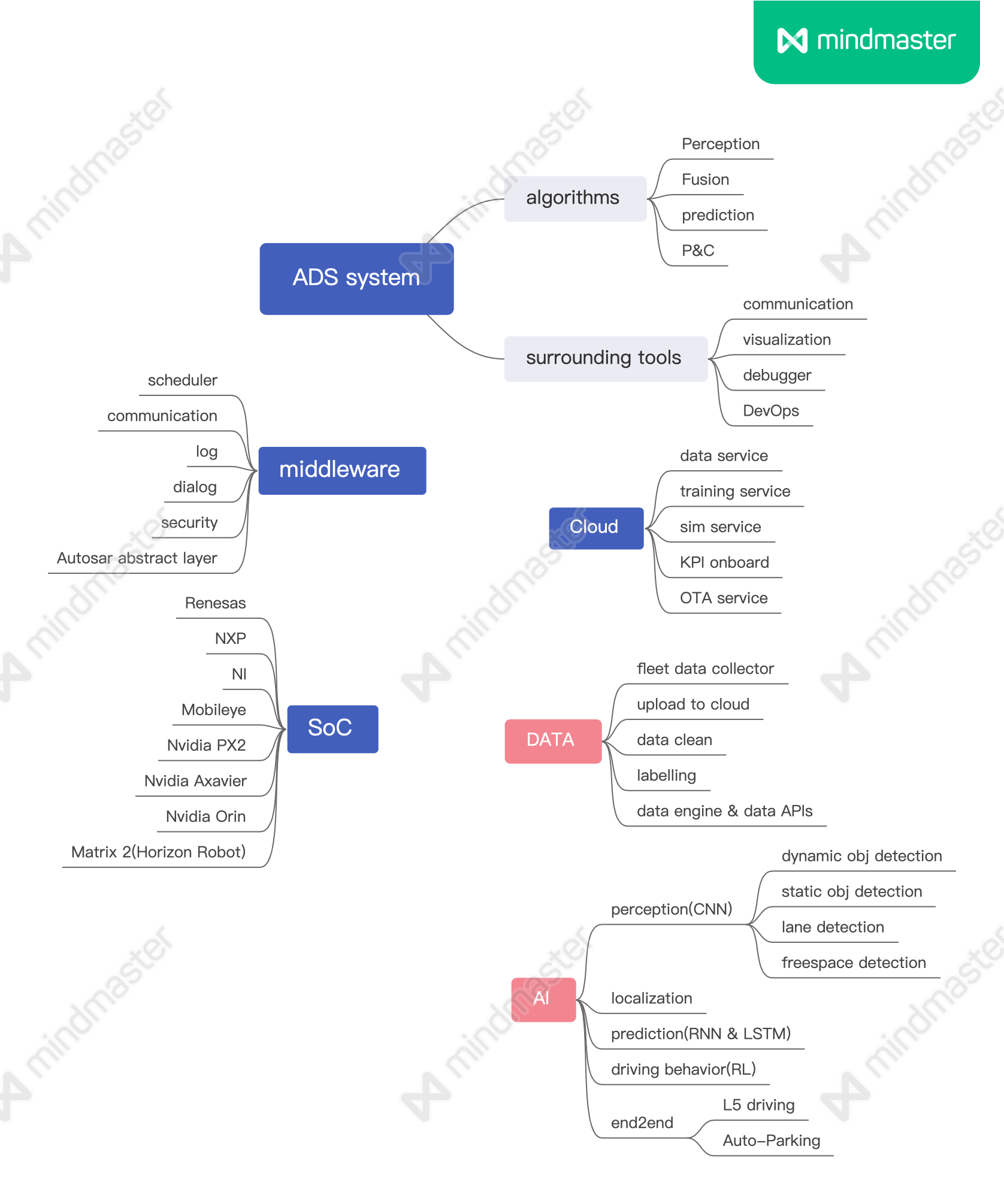

The pipeline is one thing. The other is the in-vehicle SoC, which has a few mature choices, such as Renesas, NXP, Mobileye, and Nvidia Drive PX2/Xavier/Orin, plus a bunch of new teams, such as Horizon Robotics and Huawei MDC.

The traditional definition of an in-vehicle SoC puts little emphasis on the data pipeline and the dev tools around it. Nowadays, however, take a look at Huawei MDC: the ecosystem is tightly closed, from hardware to software and from the vehicle to the cloud. Of course, the pioneer Nvidia has already extended its architecture from vehicle to cloud and from development to validation.

The SoC is the source of ADS/ADAS data, which gives it the role of a mobility data center (MDC). We are seeing a complete shift in mindset from the software-defined vehicle to the data-defined vehicle.

The mechanical part of the vehicle is somewhat devalued once the vehicle is thought of as just another online data source.

To maximize the value of data, the data services (software) are better decoupled from the vehicle hardware (ECUs, controllers). This is another trend among OEMs, e.g. AUTOSAR.

So far, we see that AI models, simulation, and data services are just the tip of the iceberg. This is the moment when self-driving becomes the integrated application of AI, 5G, cloud computing infrastructure, and future manufacturing. The market is so large that no one can eat it all.

refer

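Mobileye's famous EyeQ series chips also embed perception algorithms, but Mobileye usually sells hardware and software as a bundle. It does not tailor the product to a customer's situation, nor does it let customers run their own algorithms on its perception chips. Horizon Robotics takes a fully open approach instead. It can supply the hardware alone or a complete solution including algorithms, and it also gives customers a complete toolchain called Tiangong Kaiwu (天工开物) so they can tune and optimize the algorithms on the chip themselves.

Matrix2 (Horizon Robotics)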

mdc (huawei)

Drive PX 2 (nvidia)

DRIVE AGX Xavier (nvidia)

Orin (Nvidia)

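Nvidia leads on most safety standards

Drawing on our own experience in safety and engineering, NVIDIA has taken a leading role in the Connected and Automated Vehicles working group of the European Association of Automotive Suppliers (CLEPA). NVIDIA has a rich history in simulation technology and functional safety, and our autonomous vehicle team has valuable experience in automotive safety and engineering.

With a platform like NVIDIA DRIVE Constellation, manufacturers can run long-distance driving tests of their technology. They can also set up rare or dangerous test scenarios that are seldom encountered in the real world.

NVIDIA also works with the Association for Standardization of Automation and Measuring Systems (ASAM). We are leading one of its working groups to define an open standard for creating simulation scenarios. The standard describes road topology representations, sensor models, world models, industry standards and key performance indicators, thereby advancing validation methods for autonomous vehicle deployment.

The industry is developing a new standard, ISO 21448, known as Safety of the Intended Functionality (SOTIF). It aims to avoid situations that can still lead to risk even when all vehicle components are operating normally. For example, if a deep neural network running in the vehicle misidentifies a traffic sign or an object on the road, an unsafe situation can arise even though the software has not malfunctioned.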

Drive OS

Drive AV(a variety of DNNs)

Drive Hyperion(AGX Pegasus, and sensors)

Drive IX

Drive Mapping

- Drive Constellation, a data center solution to test and validate the actual hardware/software in an AV car

data factory -> AI training ->

We also expand the use of our DNNs to support features like automatic emergency steering and autonomous emergency braking, providing redundancy to these functionalities

We also define key performance metrics to measure the collected data quality and add synthetic data into our training datasets

we incorporate actual sensor data from automatic emergency braking scenarios using re-simulation to help eliminate false positives.

NVIDIA created the DRIVE Road Test Operating Handbook to ensure a safe, standardized on-road testing process.

leetCode_swap_pairs

background

intuitive sol

why HiL, MiL, SiL and ViL

background

There are two very different kinds of teams in AD. One kind is people from top Tier 1s and popular OEMs. Their strength is maturity in product process management, e.g. V&V and the corresponding R&D process. Their other plus is a lot of engineering know-how and experience in making things work. Most of us know how a system or tool works, but that kind of knowledge is only about 10% of the knowledge needed to build the system or tool and make it work.

A mature R&D process, as I see it, is very valuable in the long run, which is why we say engineers get richer as they get older. To this day, top German and Japanese companies still have a strong culture of respecting engineers. That culture keeps their engineers and their industrial process management growing more and more mature. It is a very good starting point for young graduates, if they can join these top companies early.

The other kind of team comes from Internet companies. They are people with the philosophy: if you can imagine it, I can build it. That philosophy often comes true in IT and Internet service companies. It also looks promising for the IT infrastructure of industrial fields, e.g. services moving to the cloud and office work in the cloud, which spawns lots of cloud infrastructure. But the core of things like robot operation, making vehicles drive automatically, or computer-aided engineering requires a lot of know-how, far beyond the infrastructure.

Internet teams are popular in China and the US, but the US still has a strong engineering culture like Germany, which China lacks. Basically, what I want to say is that in China there is no apprenticeship process, in either companies or training organizations, to turn new graduates into mature engineers. That is why engineers here get little credit.

Even for Internet knowledge and skills in China, we often say programming is a young person’s job: as programmers get older, they get poorer.

That is the tough situation for many young people: if someone gets neither good engineering training nor is smart and young enough to code, their career is doomed. But that is not a problem for the country or for the companies. Chinese workers are still cheap, and there are always lots of young people who need bread more than a promising career.

physical or virtual

The first principles of writing a program:

- make it run ok

- make it run correctly

- make it perform well

- make it extensible

The following explains why we need HiL, MiL, SiL, and ViL during the verification and validation of an ADS product, from the physical-or-virtual viewpoint.

physical or virtual world

case 1) from physical world to physical sensor

Namely, physical road tests, either on closed test fields or on open roads.

This is the case where we need a ground truth (G.T.) system to validate the device-under-test (DUT) sensor. The goal is to evaluate or test the DUT’s performance boundary, failure modes, etc.

The people involved can come from sensor evaluation teams or system integration teams.

case 2) from virtual world to physical sensor

The virtual world comes either from digital twins or from replaying a data collector’s recordings. This is the hardware-in-the-loop (HiL) process, which is used a lot to validate either sensors or MCUs. When HiL is used to validate MCUs, the virtual world consists of simulated signals, etc.

virtual sensor

case 3) from virtual world to virtual sensor

There are basically three kinds of virtual sensors:

ideal (with noise) sensor model

statistically satisfied sensor model

physical sensor model

Of course, the cost increases from the ideal to the physical sensor model.

Ideally, the downstream fusion module should be insensitive to whether its inputs come from physical sensors or virtual sensors (sensor models). Capturing the virtual world with virtual sensors is very cheap. That makes it well suited for training perception algorithms when physical sensor data is expensive, which is the reality for most companies now.
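A minimal Python sketch of that decoupling follows. It assumes a hypothetical `ObjectSensor` interface that both an ideal (with noise) sensor model and a replay of recorded physical detections implement, so the fusion stage never needs to know which one it is fed. The class names, the 100 m range and the 0.2 m noise level are illustrative assumptions. `fuse` is only a placeholder union with no data association.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Protocol


@dataclass
class Detection:
    x: float  # longitudinal position in the ego frame, m
    y: float  # lateral position in the ego frame, m


class ObjectSensor(Protocol):
    """Hypothetical interface shared by physical and virtual sensors."""

    def detect(self, truth: List[Detection]) -> List[Detection]: ...


class IdealNoisySensor:
    """Ideal sensor model: sees every object within range, plus Gaussian position noise."""

    def __init__(self, max_range: float = 100.0, sigma: float = 0.2, seed: int = 0):
        self.max_range = max_range
        self.sigma = sigma
        self.rng = random.Random(seed)

    def detect(self, truth: List[Detection]) -> List[Detection]:
        return [
            Detection(d.x + self.rng.gauss(0.0, self.sigma), d.y + self.rng.gauss(0.0, self.sigma))
            for d in truth
            if math.hypot(d.x, d.y) <= self.max_range
        ]


class ReplaySensor:
    """Stands in for a physical sensor by replaying recorded detections frame by frame."""

    def __init__(self, frames: List[List[Detection]]):
        self._frames = iter(frames)

    def detect(self, truth: List[Detection]) -> List[Detection]:
        return next(self._frames)  # recorded data ignores the simulated truth


def fuse(sensors: List[ObjectSensor], truth: List[Detection]) -> List[Detection]:
    """Placeholder fusion (naive union); it depends only on the ObjectSensor interface."""
    return [d for s in sensors for d in s.detect(truth)]


truth = [Detection(10.0, 0.0), Detection(150.0, 2.0)]
print(len(fuse([IdealNoisySensor()], truth)))                       # 1: the 150 m object is out of range
print(len(fuse([ReplaySensor([[Detection(10.1, -0.1)]])], truth)))  # 1
```

Swapping `IdealNoisySensor` for `ReplaySensor` changes nothing on the fusion side. That is the property the paragraph asks the fusion module to have.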

Training AI on virtual sensor data has local-maximum issues, so in practice most teams mix in about 10% real-world sensor data to improve results or escape these local maxima.
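Here is a sketch of such a mix. It assumes both pools are plain in-memory lists and that sampling with replacement is acceptable. The 10% default follows the figure in the text; the function name and sampling scheme are hypothetical.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def mix_datasets(synthetic: Sequence[T], real: Sequence[T], size: int,
                 real_fraction: float = 0.1, seed: int = 0) -> List[T]:
    """Return `size` training samples, about `real_fraction` of them real-world.

    Samples are drawn with replacement from each pool and then shuffled.
    """
    if not 0.0 <= real_fraction <= 1.0:
        raise ValueError("real_fraction must be in [0, 1]")
    rng = random.Random(seed)
    n_real = round(size * real_fraction)
    batch = [rng.choice(real) for _ in range(n_real)]
    batch += [rng.choice(synthetic) for _ in range(size - n_real)]
    rng.shuffle(batch)
    return batch


batch = mix_datasets(synthetic=["sim_frame"], real=["road_frame"], size=1000)
print(batch.count("road_frame"))  # 100
```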

Of course, the virtual sensor is one fundamental element for closing the loop from the virtual world to vehicle motion in a sim environment.

physical or virtual perception to fusion

Here the question is how to test fusion: does it work correctly, does it perform well, and how does it handle abnormal (failure) cases?

case 4) from physical sensor perception data to fusion

Physical perception data comes from two sources:

- the sensor system in vehicle

- ground truth system(G.T.)

During the R&D stage, the in-vehicle sensor system is treated as the device under test (DUT). Its results can be compared with the labeled outputs of the G.T. system, which helps validate DUT performance as well as evaluate fusion performance.
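Here is a minimal sketch of the DUT-versus-G.T. comparison. It assumes both systems report object positions in the same frame and at the same timestamp. It uses greedy nearest-neighbor matching inside a fixed gate; the 1.5 m gate is an assumption, and production tools often use Hungarian assignment and IoU instead.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) object position in meters, common frame and timestamp


def match_to_ground_truth(dut: List[Point], gt: List[Point],
                          gate: float = 1.5) -> Tuple[int, int, int]:
    """Greedily pair DUT detections with G.T. objects, closest pairs first, within `gate` m.

    Returns (true positives, false positives, false negatives).
    """
    pairs = sorted((math.dist(d, g), i, j) for i, d in enumerate(dut) for j, g in enumerate(gt))
    used_dut, used_gt = set(), set()
    for dist, i, j in pairs:
        if dist > gate:
            break  # pairs are sorted, so every remaining pair is farther away
        if i not in used_dut and j not in used_gt:
            used_dut.add(i)
            used_gt.add(j)
    tp = len(used_dut)
    return tp, len(dut) - tp, len(gt) - tp


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    # Convention: an empty denominator counts as perfect (nothing reported / nothing to find).
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


gt = [(10.0, 0.0), (30.0, 3.5)]
dut = [(10.4, 0.2), (55.0, 0.0)]  # one good detection, one ghost, one miss
counts = match_to_ground_truth(dut, gt)
print(counts, precision_recall(*counts))  # (1, 1, 1) (0.5, 0.5)
```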

In the physical world, a ground truth system is usually equipped with a few higher-precision Lidars, which of course costs more.

Another advantage of physical perception data is that edge cases can be extracted from it to enrich the scenario libraries, especially for sensor perception and fusion.

During the mass-production stage, the data pipeline runs from the data collector in each running vehicle to the cloud data platform. Once that pipeline is closed-loop, the back-end system can easily extract and aggregate high volumes of edge cases, and this large amount of physical perception data can then drive fusion LogSim.

One question here: edge case scenarios are the abnormal perception/fusion cases, but detecting them is not yet mature work for most teams. That applies both to finding them in high-volume data in the cloud (back end) and to designing an in-vehicle (front end) trigger mechanism that picks out only the edge cases.
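To make the trigger idea concrete, here is a hedged sketch of rule-based in-vehicle triggers. The two rules and their thresholds are hypothetical examples, not a proven edge-case detector. One rule fires on camera/radar object-count disagreement; the other fires when a close object suddenly vanishes.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Frame:
    t: float              # timestamp, s
    camera_objects: int   # objects reported by camera perception
    radar_objects: int    # objects reported by radar perception
    nearest_gap_m: float  # distance to the nearest fused object ahead, m


# A trigger is a predicate over (previous frame, current frame).
Trigger = Callable[[Frame, Frame], bool]


def sensor_disagreement(prev: Frame, cur: Frame) -> bool:
    """Camera and radar disagree on the object count by 2 or more."""
    return abs(cur.camera_objects - cur.radar_objects) >= 2


def object_vanished_close(prev: Frame, cur: Frame) -> bool:
    """The nearest object was within 20 m and the gap jumped by more than 15 m in one frame."""
    return prev.nearest_gap_m < 20.0 and cur.nearest_gap_m - prev.nearest_gap_m > 15.0


def find_edge_cases(frames: List[Frame], triggers: List[Trigger]) -> List[float]:
    """Timestamps where any trigger fires; in a vehicle, each hit would start a clip upload."""
    return [cur.t for prev, cur in zip(frames, frames[1:])
            if any(trig(prev, cur) for trig in triggers)]


frames = [Frame(0.0, 2, 2, 30.0), Frame(0.1, 4, 1, 30.0), Frame(0.2, 2, 2, 12.0), Frame(0.3, 2, 2, 40.0)]
print(find_edge_cases(frames, [sensor_disagreement, object_vanished_close]))  # [0.1, 0.3]
```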

Another question: evaluating fusion requires objective ground truth, which is not available in the mass-production stage. An alternative is to use a better-performing, stable sensor, such as a Mobileye sensor, as semi-ground truth.

case 5) from virtual sensor perception data to fusion

In the sim world, it is easy to create a ground truth sensor and then check the fusion output against it, which is great. The only assumption here is that the sim world is a highly faithful copy of the physical world. If not, the ground truth sensor is not useful. Obviously, building a digital twin that is as physically accurate as possible is no easier than creating the ADS itself.

On the other hand, the sim world can also be used for things other than evaluating fusion. For example, it can generate synthetic images and point clouds to train perception AI modules, which is one benefit.

In summary, evaluating and validating fusion requires ground truth labeling, either from a physical g.t. system or from a virtual g.t. sensor. 1) The g.t. system is only used during the R&D stage, with a small volume of g.t. data; for the mass-release stage, there is no good g.t. source yet. 2) A g.t. sensor in the virtual world is easy to create, but it requires the virtual world to be almost physically accurate.

Second opinion: fusion evaluation is deterministic and objective. So if fusion is validated well during the R&D stage and its performance is robust and stable, there is no need to validate the fusion module in mass release. When perception/fusion edge cases happen, we can study them case by case.

Third opinion: abnormal cases, e.g. sensor failure or a blocked sensor, also need to be validated during R&D.

planning

Evaluating whether planning is good or not is very subjective, whereas in fusion, ground truth is the criterion. So there are two solutions:

- make subjective goals as quantifiable as possible

- define RSS criteria to bound the planning results
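For the second solution, the RSS model published by Mobileye defines a minimum safe longitudinal distance between a rear car with speed $v_r$ and a front car with speed $v_f$. The inputs are a response time $\rho$, a maximum acceleration of the rear car during the response $a_{\max,accel}$, a minimum braking of the rear car $a_{\min,brake}$, and a maximum braking of the front car $a_{\max,brake}$:

$$d_{\min} = \left[ v_r \rho + \tfrac{1}{2} a_{\max,accel}\, \rho^2 + \frac{(v_r + \rho\, a_{\max,accel})^2}{2\, a_{\min,brake}} - \frac{v_f^2}{2\, a_{\max,brake}} \right]_+$$

Here $[x]_+ = \max(x, 0)$. The sketch below evaluates it and uses it as a bound on a planned gap. The default parameter values are illustrative assumptions, not calibrated numbers.

```python
def rss_safe_longitudinal_distance(v_rear: float, v_front: float, rho: float = 0.5,
                                   a_accel_max: float = 3.0, a_brake_min: float = 4.0,
                                   a_brake_max: float = 8.0) -> float:
    """Minimum safe gap (m) between a rear (ego) car and a front car in the same lane."""
    v_after_response = v_rear + rho * a_accel_max  # worst case: rear car accelerates during rho
    d = (v_rear * rho
         + 0.5 * a_accel_max * rho ** 2
         + v_after_response ** 2 / (2 * a_brake_min)   # rear car then brakes gently
         - v_front ** 2 / (2 * a_brake_max))           # front car brakes as hard as possible
    return max(d, 0.0)


def planned_gap_is_safe(gap: float, v_rear: float, v_front: float, **params: float) -> bool:
    """Bound a planning result: the planned gap must not be below the RSS distance."""
    return gap >= rss_safe_longitudinal_distance(v_rear, v_front, **params)


print(rss_safe_longitudinal_distance(20.0, 20.0))  # 43.15625
print(planned_gap_is_safe(30.0, 20.0, 20.0))       # False
```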

Ideally, planning should be insensitive to whether the fusion output comes from the physical or the virtual world. When it comes to planning verification, we assume the fusion component is stable and well verified. In other words, finding fusion bugs should not be delayed until the planning stage.

case 6) from sim fusion output to planning

SiL is a good way to verify the planning model. Previously, planning engineers created test scenarios in VTD or PreScan and checked whether the planning algorithms worked.

A mature team may have hundreds or thousands of planning-related scenarios, and a proper verification process requires regression on all of them. Automating and accelerating this verification loop leads to the second solution: cloud SiL with DevOps, like TAD Sim from Tencent, Octopus from Huawei, etc.
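Here is a sketch of such a regression loop. It assumes a hypothetical `simulate` callable that runs one scenario and returns the minimum gap to other agents; in a cloud setup it would submit a SiL job and wait for it. The pass criterion and the `toy_simulate` stand-in are made up for illustration. Threads are enough here because each worker mostly waits on a remote job.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Scenario:
    name: str
    lead_speed: float   # m/s, hypothetical scenario parameter
    initial_gap: float  # m, hypothetical scenario parameter


@dataclass
class Result:
    name: str
    passed: bool
    min_gap: float


def run_regression(scenarios: List[Scenario], simulate: Callable[[Scenario], float],
                   min_allowed_gap: float = 2.0, workers: int = 8) -> Dict[str, List[Result]]:
    """Run every scenario in parallel and split the results into passed / failed."""
    def evaluate(s: Scenario) -> Result:
        gap = simulate(s)  # in a cloud setup: submit a SiL job, wait, read back the metric
        return Result(s.name, gap >= min_allowed_gap, gap)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(evaluate, scenarios))
    return {
        "passed": [r for r in results if r.passed],
        "failed": [r for r in results if not r.passed],
    }


def toy_simulate(s: Scenario) -> float:
    """Made-up stand-in for a simulator: slower lead cars eat more of the initial gap."""
    return s.initial_gap - max(0.0, 15.0 - s.lead_speed)


report = run_regression(
    [Scenario("cut_in_fast_lead", 20.0, 10.0), Scenario("cut_in_slow_lead", 5.0, 8.0)],
    toy_simulate,
)
print([r.name for r in report["failed"]])  # ['cut_in_slow_lead']
```

In a DevOps pipeline, a non-empty `failed` list would block the release candidate.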

Another benefit of SiL in the cloud is handling complex driving behavior and realistic vehicle/pedestrian-to-vehicle interactions, especially as the scenario library keeps growing over the ADS lifecycle.

If the planning algorithm is AI-based, it helps to mimic real human drivers’ behavior and assign these driving behaviors to the agents in the virtual world. This will be very helpful for training the ego vehicle’s planning AI model.

Two approaches are involved here: imitation learning and reinforcement learning. First, we train the agent/ego driving behavior on physical-world driving data via imitation learning in a training gym. Then we transfer these trained models to the agents and the ego in the sim world. There they keep driving and interacting with each other, and keep training themselves to plan better.
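The imitation-learning step can be illustrated with its simplest form, behavior cloning, which fits a policy to (state, human action) pairs. The sketch below assumes a linear car-following policy `a = k_gap * gap_error + k_vel * rel_speed` instead of a DNN. It leaves out the reinforcement-learning phase in the sim world. The features and function names are hypothetical. The demonstrations in the usage lines are synthetic, generated from a known policy so that its recovery can be checked; they are not real driving data.

```python
from typing import List, Tuple

# One demonstration sample: (gap_error_m, relative_speed_mps, human_accel_mps2)
Sample = Tuple[float, float, float]


def fit_linear_policy(demos: List[Sample]) -> Tuple[float, float]:
    """Behavior cloning with a linear policy, fitted by least squares.

    Solves the 2x2 normal equations [Sgg Sgv; Sgv Svv] [k_gap; k_vel] = [Sga; Sva].
    """
    s_gg = sum(g * g for g, v, a in demos)
    s_gv = sum(g * v for g, v, a in demos)
    s_vv = sum(v * v for g, v, a in demos)
    s_ga = sum(g * a for g, v, a in demos)
    s_va = sum(v * a for g, v, a in demos)
    det = s_gg * s_vv - s_gv * s_gv
    if abs(det) < 1e-12:
        raise ValueError("demonstrations do not excite both features")
    k_gap = (s_ga * s_vv - s_gv * s_va) / det
    k_vel = (s_gg * s_va - s_gv * s_ga) / det
    return k_gap, k_vel


def policy(k: Tuple[float, float], gap_error: float, rel_speed: float) -> float:
    """Cloned driver: commanded acceleration for the current state."""
    return k[0] * gap_error + k[1] * rel_speed


# Synthetic demonstrations from a known "driver" with k = (0.2, 0.6)
demos = [(g, v, 0.2 * g + 0.6 * v) for g, v in [(1.0, 0.0), (0.0, 1.0), (2.0, -1.0), (-3.0, 2.0)]]
k = fit_linear_policy(demos)
print([round(x, 6) for x in k])  # [0.2, 0.6]
```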

For corner/edge cases, collecting physical-world situations is very valuable, since planning is very subjective and there are lots of long-tail scenarios.

case 7) from physical fusion output to planning

Tesla looks to be the only one that has deployed a shadow-mode triggering mechanism to get planning corner cases from physical driving and close the data loop.
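Tesla's actual trigger logic is not public. The following is only a hedged sketch of the shadow-mode idea. The planner runs without actuating, and its proposed acceleration and steering are compared with what the human driver did. Sustained disagreements are flagged for upload. The tolerances and the minimum episode duration are assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ShadowSample:
    t: float              # timestamp, s
    human_accel: float    # what the driver actually did, m/s^2
    planner_accel: float  # what the shadow planner would have done, m/s^2
    human_steer: float    # rad
    planner_steer: float  # rad


def shadow_triggers(samples: List[ShadowSample], accel_tol: float = 1.5,
                    steer_tol: float = 0.1, min_duration: float = 0.3) -> List[float]:
    """Start times of human/planner disagreement episodes lasting at least `min_duration` s."""
    starts: List[float] = []
    episode_start: Optional[float] = None
    last_t: Optional[float] = None
    for s in samples:
        disagree = (abs(s.human_accel - s.planner_accel) > accel_tol
                    or abs(s.human_steer - s.planner_steer) > steer_tol)
        if disagree and episode_start is None:
            episode_start = s.t
        elif not disagree and episode_start is not None:
            # last_t is the timestamp of the last disagreeing sample
            if last_t - episode_start >= min_duration:
                starts.append(episode_start)
            episode_start = None
        last_t = s.t
    if episode_start is not None and last_t - episode_start >= min_duration:
        starts.append(episode_start)
    return starts


# Driver brakes hard from t = 0.2 to 0.6 s while the planner wants to cruise; a blip at 0.8 s
samples = [ShadowSample(i / 10, -3.0 if 2 <= i <= 6 or i == 8 else 0.0, 0.0, 0.0, 0.0)
           for i in range(10)]
print(shadow_triggers(samples))  # [0.2]
```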

The large volume of physical driving data is very useful for the verification and iteration of planning:

aggregate more real planning scenarios, by detecting edge cases

train better driving behavior, as close as possible to humans

control

Control is the process from the planning output (e.g. acceleration, deceleration, steering angle) to the physical actuator response (brake force, engine torque, etc.).

There are a few common issues:

Nonlinear characteristics: mostly we do not get exactly the control output we expect, e.g. from deceleration to brake force.

The actuator has a response delay. Professional engineers need to tune it for a good balance, and even then it does not work as expected in all scenarios.

The actuators as a whole are very complex and require tuning.
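The response-delay issue can be illustrated with a common simplified actuator model: a pure dead time followed by a first-order lag. This is a hedged sketch. The 0.1 s delay and 0.2 s time constant are placeholder values. Real brake or powertrain responses are also nonlinear, which this model ignores.

```python
from collections import deque
from typing import List


def simulate_actuator(commands: List[float], dt: float = 0.01, delay: float = 0.1,
                      tau: float = 0.2) -> List[float]:
    """Dead time plus first-order lag: a crude model of, e.g., brake deceleration response.

    commands: requested deceleration per step (m/s^2); returns achieved deceleration per step.
    """
    delay_steps = round(delay / dt)
    in_transit = deque([0.0] * delay_steps)  # commands that have not reached the actuator yet
    alpha = dt / (tau + dt)                  # discrete first-order low-pass coefficient
    y, out = 0.0, []
    for u in commands:
        in_transit.append(u)
        u_delayed = in_transit.popleft()
        y += alpha * (u_delayed - y)
        out.append(y)
    return out


response = simulate_actuator([5.0] * 200)  # 2 s step request of 5 m/s^2
print(round(response[9], 3), round(response[-1], 2))  # 0.0 5.0
```

The first 10 steps show no response at all (the dead time), and the output then approaches the request gradually. That is exactly why tuning for a good balance is hard.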

case 8) from planning to virtual vehicle model

This is where the sim requires a high-precision vehicle dynamics model, which affects performance. But a simple vehicle dynamics model does work for planning, if consistency with physical performance is not required.
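As an example of a "simple vehicle dynamics" model, the kinematic bicycle model is often good enough for planning-level SiL. Below is a minimal sketch with forward-Euler integration. It assumes a 2.8 m wheelbase and a rear-axle reference point, and it ignores tire slip, load transfer and actuator dynamics.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    x: float = 0.0    # m
    y: float = 0.0    # m
    yaw: float = 0.0  # rad
    v: float = 0.0    # m/s


def bicycle_step(s: State, accel: float, steer: float, dt: float = 0.05,
                 wheelbase: float = 2.8) -> State:
    """One forward-Euler step of the kinematic bicycle model (rear-axle reference point)."""
    return State(
        x=s.x + s.v * math.cos(s.yaw) * dt,
        y=s.y + s.v * math.sin(s.yaw) * dt,
        yaw=s.yaw + s.v / wheelbase * math.tan(steer) * dt,
        v=max(0.0, s.v + accel * dt),  # no reversing in this sketch
    )


s = State(v=10.0)
for _ in range(20):  # 1 s straight ahead at 10 m/s
    s = bicycle_step(s, accel=0.0, steer=0.0)
print(s.x, s.y)  # 10.0 0.0
```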

case 9) from planning to physical vehicle

This is ViL, which is another big topic.

find out ADS simulation trends from top teams

background

What is the basic logic behind simulation in an autonomous driving system (ADS)? Why do we need simulation in ADS, during AD development and even after mass production? How do we integrate simulation into the AD closed-loop platform, namely from fleet/test data collection to valuable new features?

Before I could answer these questions, I went through a few steps:

- L2 commercial sims

e.g. the MATLAB Automated Driving System Toolbox, VTD, PreScan, etc.

- L3+ WorldSim

A few years ago, most ADS teams learned that AD requires at least 10 billion miles of verification, which cannot be done on traditional test fields. Hence digital twins and game-engine-based simulators, e.g. LGSVL and CARLA. Tencent, Huawei, etc. have already offered such simulators as services on their public clouds. We also tried LGSVL and deployed it in a k8s environment. That was a good time to learn, but it was not really helpful in practice.

- L2 LogSim

LogSim assists the iteration of L2 ADAS functions/algorithms/features. As mentioned in "system validation in l2 adas", most L2 ADAS features do not necessarily depend on LogSim; standard benchmark test suites are enough most of the time.

Of course, a larger amount of LogSim can definitely expose more bottlenecks in existing L2 ADAS features, but there is a balance between cost and profit. Currently, optimizing L2 ADAS features on large amounts of physical data is not an efficient investment for most OEMs.

The special case among L2 features is AEB false positives, which actually require statistical measurement.

In a typical development cycle, an AEB system goes through about 5 major versions, with a few minor versions in each. Every version deployment needs its false positives checked statistically, which makes LogSim a must.
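One way to check false positives statistically is to treat FP events over the LogSim mileage as a Poisson process. A release is then gated on an upper confidence bound of the FP rate, not on the raw count. This is a sketch under that assumption. The per-10,000 km target is a hypothetical release criterion, not an industry number.

```python
import math
from dataclasses import dataclass


def poisson_cdf(k: int, lam: float) -> float:
    """P(X <= k) for X ~ Poisson(lam). Fine for the small counts typical of AEB FPs;
    exp(-lam) underflows for lam above roughly 700."""
    term, total = math.exp(-lam), 0.0
    for i in range(k + 1):
        total += term
        term *= lam / (i + 1)
    return total


def fp_upper_bound(count: int, alpha: float = 0.05) -> float:
    """Exact one-sided (1 - alpha) upper confidence bound on the expected FP count."""
    lo, hi = 0.0, count + 10.0 * math.sqrt(count + 1) + 10.0
    for _ in range(100):  # bisection: poisson_cdf(count, lam) decreases as lam grows
        mid = (lo + hi) / 2
        if poisson_cdf(count, mid) > alpha:
            lo = mid
        else:
            hi = mid
    return hi


@dataclass
class FpCheck:
    rate_per_10k_km: float
    upper95_per_10k_km: float
    passed: bool


def release_check(fp_count: int, km: float, target_per_10k_km: float) -> FpCheck:
    """Pass only if the 95% upper bound of the FP rate is within the target."""
    upper = fp_upper_bound(fp_count) / km * 1e4
    return FpCheck(fp_count / km * 1e4, upper, upper <= target_per_10k_km)


print(round(fp_upper_bound(0), 3))             # 2.996, the "rule of three"
print(release_check(0, 20_000.0, 2.0).passed)  # True
```

With zero false positives over 20,000 km, the bound is about 1.5 FP per 10,000 km. A version can therefore pass or fail depending on how much mileage was replayed, not only on how many FPs were seen.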

Another common use of LogSim is sensor algorithm development, e.g. Bosch radar: before each new version of the radar algorithm is released, they have to check its performance statistically through LogSim.

LogSim is a very good starting point for integrating the simulator into the ADS closed-loop platform. It forces us to deal with data collection, cleaning, uploading, storage in a DB, and querying through a data engine. It also requires DevOps that automatically triggers runs, analysis, and reports.

- system verification and validation

Simulation is a test pathway; that is its original purpose. Traditionally, a new vehicle release went through closed-field tests. That has changed in the ADS age. Since an ADS is an open system, no test suite can be done once and for all. Mileage coverage and scenario coverage are therefore the new pathways in ADS validation, which leads to simulation.

- ADS regulation evaluation

That is the new trend as ADS gets popular: the government makes rules/laws to set the bar for new ADS players, and one mandatory route is simulation.

Thanks to the China Automotive Research Institute (CARI) teams.

In the following, I’d like to share some understanding of simulation from top AD simulation teams:

QCraft

Our mission at QCraft is to bring autonomous driving into real life by using a large-scale intelligent simulation system and a self-learning framework for decision-making and planning.

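QCraft was started by the ex-Waymo simulation team and has a solid understanding of simulation in AD R&D. The logic behind QCraft:

Perception is a relatively deterministic problem: how to test and evaluate it is very clear, and the overall methodology is fairly well understood.

Planning and decision-making is regarded as the most challenging problem today. First, its uncertainty is hard to measure; the current metrics for judging planning and decision-making are comfort and safety. Second, from a methodology standpoint, the mainstream planning and decision-making approaches in the industry have seen no major breakthrough compared with 20 years ago. Imitation learning and reinforcement learning approaches still face many problems in large-scale real-world application.

Many startups build their technology from scratch in this order: first get mapping and localization right, then perception, and only at the end start on planning, decision-making, and simulation.

This is a very good point. Most early start-ups focused on AI-based perception or planning. Gradually, people realized that, with no disruptive breakthrough in AI tech, AI models improve too slowly, and collecting useful data is the real bottleneck. The AI model is the cheapest thing compared with data.

The other point: Prediction & Planning (P&P) is the real challenge in L3+ ADS. For L2 ADAS features, P&P is knowledge-based, which is a 20-year-old mindset. Since P&P in L3+ requires an efficient way to test, simulation comes in.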

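Corner cases: until you run into a wild duck, you do not even know there is a wild-duck problem. So corner cases need a method to (automatically) discover new corner cases and solve them.

Beyond collecting large amounts of data, it is even more important to build an automated factory. Its automated tools turn the steady stream of valid collected data into usable models, so that corner cases are handled faster and more efficiently.

Test tools exist to help engineers develop efficiently, quickly reproduce issues seen on the vehicle, and expose potential problems early. They also provide an evaluation system that tells whether one version is better or worse than another.

Our test system can be kept highly consistent with the on-board system. A problem that appears on the road can be reproduced in simulation when we get back, and then fixed, so the same problem does not occur the next time the car goes out. The scenario library we produce is also highly consistent with the real environment, because it is learned from reality in the first place.

QCraft does not want to "see the trees but not the forest". That would mean entering some specific small application scenario by tackling problems one by one as they come. It would also mean turning into an engineering company that solves problems by piling on people and cannot scale.

In a word, building a closed-loop data platform that drives ADS evolution automatically, i.e. self-evolution, is the real face of the autonomous vehicle.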

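QCraft: what kind of simulation system driverless driving needs

- Simulation software built on game engines is mostly "flashy but impractical"

An important use of simulation software in autonomous driving is to replay a particular road test. But such third-party software is developed independently of the autonomous driving software, so it is hard to guarantee the determinism of each module. That makes the whole simulation nondeterministic and ultimately hurts its usability.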

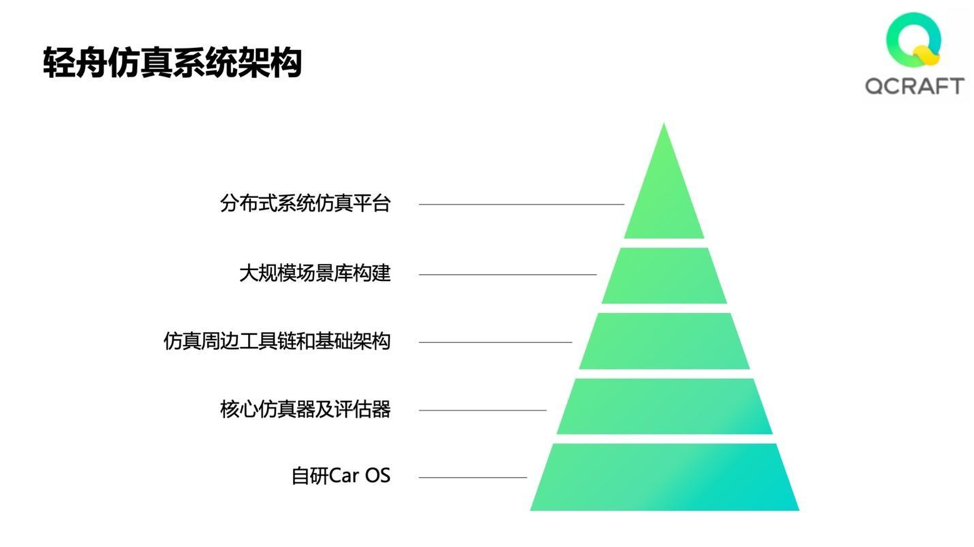

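QCraft's simulation system architecture can be divided into 5 layers:

- The bottom layer is QCraft's in-house Car OS, which relies on the underlying communication system for efficient communication between modules.
- Car OS and the simulator are a highly integrated system: the core simulator and evaluator are developed on the underlying Car OS interfaces, which guarantees the determinism of the simulation system.
- The next layer up is the simulation toolchain and infrastructure, which keeps the whole data loop effective and makes efficient use of all the data.
- The fourth layer is large-scale scenario library construction.
- The top layer is a distributed simulation platform that supports fast, large-scale simulation and produces correct evaluations in a short time.

Work on automatically generating the simulation scenario library: the red and green dots in the video represent the motion trajectories of two vehicles. To generate and vary these trajectories, a deep learning model is first trained on real traffic datasets. The trained deep neural network (a generative model) is then used to synthesize large-scale trajectories of interacting vehicles.

We believe simulation is the only path to scaled driverless technology. First, simulation and the related toolchain form an efficient data-and-test loop that supports algorithm testing and fast iteration, replacing the approach of piling on people or vehicles. Second, only software validated by large-scale intelligent simulation can guarantee safety and usability.

In a word, this is a very ambitious plan. It covers everything from Car OS to the cloud end, and the whole data pipeline from fleet data collection to model training to new feature releases. The missing part is who would pay for it. The nastiest part is who will pay for the large amount of engineering work, which is not something PhDs get excited about.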

Latent Logic

Another Waymo-related simulation startup. It offers a platform that uses imitation learning to generate virtual drivers, motorists and pedestrians based on footage collected from real-life roads. Teams working on autonomous driving projects can insert these virtual humans into the simulations they use to train the artificial intelligence powering their vehicles. The result, according to Latent Logic, is a closer-to-life simulated training environment that enables AI models to learn more efficiently.

training autonomous vehicles using real-life human behaviour

‘Autonomous vehicles must be tested in simulation before they can be deployed on real roads. To make these simulations realistic, it’s not enough to simulate the road environment; we need to simulate the other road users too: the human drivers, cyclists and pedestrians with which an autonomous vehicle may interact.’

‘We use computer vision to collect these examples from video data provided by traffic cameras and drone footage. We can detect road users, track their motion, and infer their three-dimensional position in the real world. Then, we learn to generate realistic trajectories that imitate this real-life behaviour.’

In a word, Latent Logic is dealing with P&P training in L3+ ADS, which is the real challenge.

Roman Roads

Uses imitation learning to train robots to behave like humans; state-of-the-art behavioral tech.

We offer R&D solutions from 3D environment creation, traffic flow collection to testing & validation and deployment.

- driving behavior data collection

ego view collection, fleet road test

- real-time reconstruction

real-time generation of a 3D virtual environment and human behavior (imitation learning)

- data-driven decision making

- pre-mapping

Driving behavior differs between cities. We collect data, like how people change lanes and cut in, and learn the naturalistic behaviors in different cities.

In a word, still a very solid AI team. The problems are who would pay for it, how to get valuable data, and how to integrate into an OEM’s data platform.

Cognata

Customers include Hyundai Mobis. Cognata delivers large-scale full product lifecycle simulation for ADAS and Autonomous Vehicle developers.

training

Automatically-generated 3D environments, digital twins or entirely synthetic worlds by DNN, synthetic datasets, and realistic AI-driven traffic agents for AV simulation.

validation

pre-built scenarios

standard ADAS/AV assessment programs

fuzzing for scenario variations for regulations and certifications

UI friendly

analysis

Ready-to-use pass/fail criteria for validation and certification of AV and ADAS

Trend mining for large scale simulation

visualization

how it works

static layer: digital twin

dynamic layer: AI-powered vehicles and pedestrians

sensor layer: most popular sensor models based on DNN

cloud layer: scalability and on-premise.

As an independent simulation platform, Cognata is very good. When it comes to integration with OEMs’ existing closed-loop data platforms, however, it is like a typical US company: too general, and it doesn’t get its feet wet deeply enough. Compare QCraft, whose architecture goes from bottom to top, from Car OS to cloud deployment. That is a more reliable solution for mass-produced ADAS/AD products in the future.

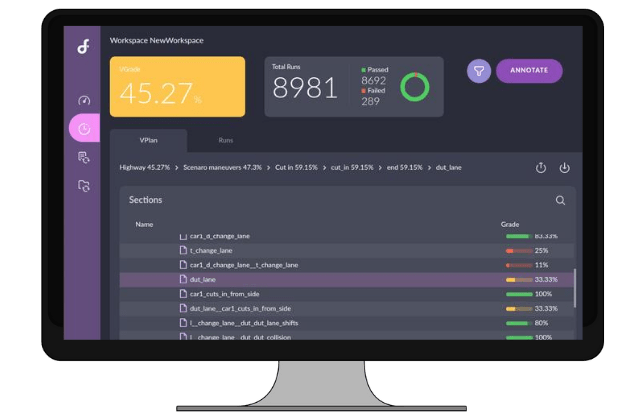

applied intuition

another Waymo derived simulation company. Test modules individually or all together with our simulation engine that’s custom built for speed and accuracy.

Improve perception algorithms: compare the performance of different stack versions to ground truth data. Test new behaviors and uncover edge cases before pushing updates to your vehicles.

Extract valuable data from real world drives and simulations

Review interesting events recorded from vehicles and simulations to determine root cause issues. Share the data with other team members for further analysis and fixes.

Extract and aggregate performance metrics from drive logs into automatic charts, dashboards, and shareable reports for a holistic view of your development progress.

Run your system through thousands of virtual scenarios and variations to catch regressions and measure progress before rolling it out for on-road testing.

In a word, it looks pretty.

metamoto

Simulation as a service: enterprise products and services for massively scalable, safe, scenario-based training and testing of autonomous system software.

Compared with PreScan, it is an Internet-based simulator, but at its core it is just another PreScan with scalability. I can’t see a data loop in the architecture, so it is helpful during R&D but not really useful after release.

Parallel Domain

Powers AD with synthetic data; from the ex-Apple AD simulation team.

Parallel Domain claims its program can generate city blocks in less than a minute using real-world map data. Parallel Domain will give customers plenty of options for fine-tuning virtual testing environments. The simulator offers the option to incorporate real-world map data, and companies can alter everything from the number of lanes on a simulated road to the condition of its computer-generated pavement. Traffic levels, number of pedestrians, and time of day can be tweaked as well.

Nio is the launch customer of Parallel Domain

PD looks to train AD in virtual reality

It goes from a real map to a virtual world, with programmable road parameters. But what about microscopic traffic flow and vehicle/pedestrian interactions?

I think it’s great to generate a virtual world with Parallel Domain, but that is not enough to serve as the simulator in the whole closed loop.

The collected data of course includes real map info, which can be used to create a virtual world. But why do we need this virtual world? To train the AD P&C system, which needs more than a static virtual world with some imitated pedestrian/vehicle behaviors.

In AI-powered AD, valid and meaningful data is the oil. The basic understanding here is that more data yields a more robust and general AI model. With more data, the AI AD system behaves better in existing scenarios. More importantly, it gains the ability to handle novel scenarios automatically.

That is what closed-loop data in AD is for.

righthook

digital twin of real world

scenario creation tool powered by AI

to derive high-value permutations and test cases automatically (maybe both static and dynamic scenarios)

vehicle configuration

- test management

integration with DevOps, cloud, and on-premise deployment

In a word, this is similar to Cognata. It generates a vivid world from physical map data or synthetic data and adds imitation-learning-based agents, then wraps it all in a DevOps tool with a configurable web UI.

The most important and also the most difficult part of this pipeline is obtaining large amounts of valid, useful real data as cheaply as possible. That data is needed to train the scenario generator as well as the agent behavior generator.

only MaaS taxi companies and OEMs have the data.

rfpro

A driving simulation and digital twin for AD/ADAS and vehicle dynamics (chassis, powertrain, etc.) development, test, and validation.

rFpro includes interfaces to all the mainstream vehicle modelling tools including CarMaker, CarSim, Dymola, SIMPACK, dSPACE ASM, AVL VSM, Siemens Virtual lab Motion, DYNAware, Simulink, C++ etc. rFpro also allows you to use industry standard tools such as MATLAB Simulink and Python to modify and customise experiments.

rFpro’s open Traffic interface allows the use of Swarm traffic and Programmed Traffic from tools such as the open-source SUMO, IPG Traffic, dSPACE ASM traffic, PTV VisSim, and VTD. Vehicular and pedestrian traffic can share the road network correctly with perfectly synchronised traffic and pedestrian signals, while allowing ad-hoc behaviour, such as pedestrians stepping into the road.

data farming: generating synthetic training data

the largest lib of digital twins of public roads in the world

supervised learning env for perception

In a word, it looks like a traditional Tier 2.

edge case research

Hologram

complements traditional simulation and road testing of perception systems

Hologram unlocks the value in the perception data that’s collected by your systems. It helps you find the edge cases where your perception software exhibits odd, potentially unsafe behavior.

From recorded data to automated edge case detection (powered by AI). The result: more robust perception.

most of the cost of developing safety-critical systems is spent on verification and validation.

In a word, safety is a traditional viewpoint, and most AI-based teams do not have a solid understanding of it. But how ECR ca

foretellix

Out-of-the-box verification automation solution for ADAS and highway functions, developed based on input from OEMs, regulators and compliance bodies.

Coverage Driven Verification, Foretellix’s mission is to enable measurable safety of ADAS & autonomous vehicles, enabled by a transition from measuring ‘quantity of miles’ to ‘quality of coverage’

- What to test?

Industry proven verification plan and 36 scenario categories covering massive number of challenges and edge cases

- When are you done?

Functional coverage metrics to guide completeness of testing
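Functional coverage can be sketched as a cross-product of parameter bins: each simulated run lands in one cell, and coverage is the fraction of cells hit. This is a generic coverage-driven-verification sketch, not Foretellix's implementation. The parameter names and bin edges are hypothetical.

```python
import itertools
from typing import Dict, Iterable, List, Sequence, Tuple


def bin_index(value: float, edges: Sequence[float]) -> int:
    """Index of the bin [edges[i], edges[i+1]) containing value, or -1 if out of range."""
    for i in range(len(edges) - 1):
        if edges[i] <= value < edges[i + 1]:
            return i
    return -1


def functional_coverage(runs: Iterable[Dict[str, float]],
                        bins: Dict[str, Sequence[float]]) -> Tuple[float, List[Tuple[int, ...]]]:
    """Cross coverage over all parameter bins.

    Returns the fraction of bin combinations hit, plus the combinations still uncovered.
    """
    names = list(bins)
    hit = set()
    for run in runs:
        cell = tuple(bin_index(run[n], bins[n]) for n in names)
        if -1 not in cell:  # runs outside the defined ranges do not count
            hit.add(cell)
    all_cells = list(itertools.product(*(range(len(bins[n]) - 1) for n in names)))
    missing = [c for c in all_cells if c not in hit]
    return len(hit) / len(all_cells), missing


bins = {"ego_speed_kph": [0, 60, 120], "cut_in_gap_m": [0, 10, 30]}
runs = [{"ego_speed_kph": 50, "cut_in_gap_m": 5},
        {"ego_speed_kph": 100, "cut_in_gap_m": 20},
        {"ego_speed_kph": 55, "cut_in_gap_m": 8}]
print(functional_coverage(runs, bins))  # (0.5, [(0, 1), (1, 0)])
```

The `missing` cells answer "when are you done?". They are the next scenarios to generate.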

This is an ADAS L2 V&V solution, mostly based on functional metric tests.

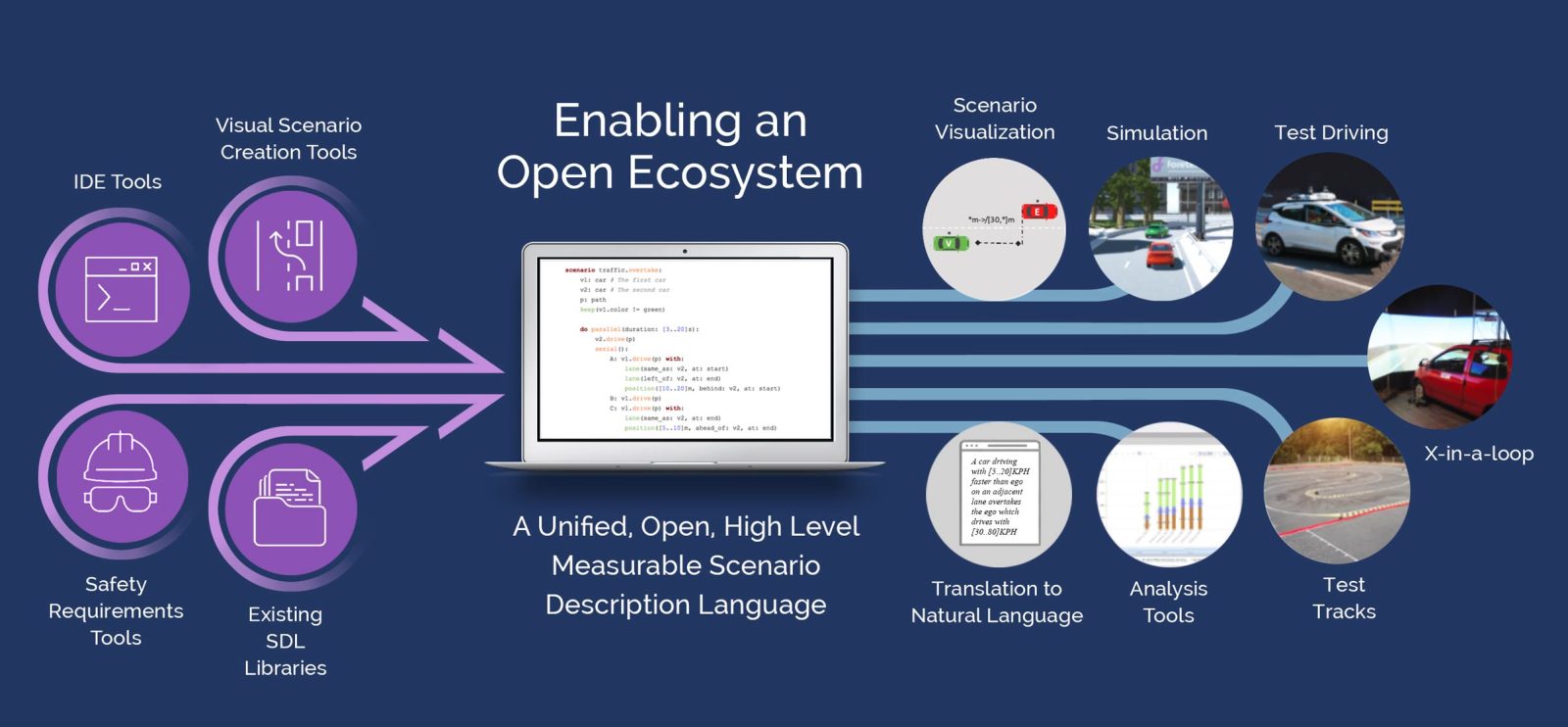

Open Language: M-SDL

M-SDL is an open, human readable, high level language that allows to simplify the capture, reuse and sharing of scenarios, and easily specify any mix of scenarios and operating conditions to identify previously unknown hazardous core & edge cases. It also allows to monitor and measure the coverage of the autonomous functionality critical to prove ADAS & AV safety, independent of tests and testing platforms.

atlatec

HD maps for simulation, digital twins of real roads, integrated well with simulation suppliers, e.g. IPG, VIRES, PreScan.

ivex

Provides qualitative safety assessment of planning and decision-making for all levels of AD during development.

safety assessment tool

What are the safety requirements during AD development?

What KPIs represent these safety requirements?

Are safety requirements revisited in each functional iteration, or done once and for all?

Safety requirements can be validated before release. After that, whenever new corner cases are detected, safety validation needs to run automatically. In this way, safety and function keep in step.

Capabilities of the safety assessment tool:

detection of unsafe cases and decisions across a large number of scenarios

a qualitative metric of safety (RSS model)

statistical analysis of risk and safety metrics

safety co-pilot

guarantee safety of a planned trajectory.

The safety co-pilot uses the safety model to assess whether (1) a situation is safe, and (2) a planned trajectory for the next few seconds can be considered as safe, accounting for predicted movements of other objects and road users.
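Here is a hedged sketch of the second check, whether a planned trajectory is safe. It assumes the ego plan and each object prediction are sampled at the same time steps. It uses a fixed safety radius for simplicity; a real co-pilot would use a proper safety model (such as an RSS-style distance) and prediction uncertainty. The radius is an assumption.

```python
import math
from typing import List, Tuple

Traj = List[Tuple[float, float]]  # (x, y) positions in meters, sampled at a common time step


def trajectory_is_safe(ego: Traj, predicted_objects: List[Traj], safety_radius: float = 2.5) -> bool:
    """Safe if, at every shared time step, the ego stays at least `safety_radius` meters
    away from every predicted object position."""
    for obj in predicted_objects:
        for (ex, ey), (ox, oy) in zip(ego, obj):
            if math.hypot(ex - ox, ey - oy) < safety_radius:
                return False
    return True


ego = [(i * 2.0, 0.0) for i in range(10)]           # ego drives straight at 2 m per step
crossing = [(10.0, 5.0 - i * 1.0) for i in range(10)]  # pedestrian crossing the ego lane
parallel = [(i * 2.0, 3.5) for i in range(10)]       # car in the adjacent lane
print(trajectory_is_safe(ego, [crossing]), trajectory_is_safe(ego, [parallel]))  # False True
```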

In a word, safety is a big topic, but I can’t see the tech behind how Ivex solves it.

nvidia

intel

summary

from the top ADS simulation teams, we see a few trends:

simulation driven P&P iteration

simulation driven V&V

Can simulation be an independent tool, or is it a customized, in-house service?

Maybe it will be like the CAE era. Early on, Ford/GM had their own CAE packages; later, CAE tools were maintained by external suppliers. When ADS simulation matures, there may be a few independent ADS simulation companies, like Ansys and Abaqus in the CAE field.

Or it may be a totally different story that I can’t imagine here.

refer

system validation in l2 adas

background

From system requirements, the functional models can be created. The bottom line of ADAS is safety, which defines the safety requirements. During ADAS system validation, both functional modules and safety modules should be covered.

Most of my experience lies in SiL. A question I’ve had recently is what split between SiL and HiL is a good choice.

A few years ago, SiL, especially world simulators such as LGSVL and CARLA, got a lot of focus. The big companies said that achieving an L3+ autonomous driving system requires at least 10 billion miles of driving tests, which is almost impossible within a traditional vehicle design lifecycle; this gave virtual simulated driving validation its reason to exist.

And that figure doesn’t even consider the iteration of ADAS algorithms: if there is no mechanism to guarantee that previously recorded road data remains valid for validating a new algorithm version, another 10 billion miles would have to be collected for every iteration.

But now most OEMs choose a gradual evolution from ADAS to AD. For ADAS development and testing, Bosch has mature solutions: they don’t need 10 billion miles to validate L2 ADAS functions such as AEB, ACC, and LK; usually 1,500 hours of driving data is enough, and they have ways to reuse these driving hours as much as possible across ADAS algorithm iterations.
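Where such mileage figures come from is not spelled out here. One common statistical argument, used for example in RAND’s 2016 “Driving to Safety” study, is the zero-failure bound: to claim with confidence C that the failure rate per mile is below R, after observing no failures, a fleet needs n = ln(1 − C) / ln(1 − R) miles. The target rate below is a hypothetical value, not a figure from the source.

```python
import math


def miles_to_demonstrate(rate_per_mile: float, confidence: float) -> float:
    """Failure-free miles needed to claim, at the given confidence, that the
    true failure rate is below rate_per_mile (zero-failure binomial bound:
    n = ln(1 - C) / ln(1 - R))."""
    # log1p keeps precision for tiny rates such as 1e-8
    return math.log(1.0 - confidence) / math.log1p(-rate_per_mile)


# Hypothetical target: fewer than 1 failure per 100 million miles, 95 % confidence.
n = miles_to_demonstrate(1e-8, 0.95)
print(f"{n / 1e6:.0f} million failure-free miles")  # 300 million failure-free miles
```

The bound holds for one software version only, which is why re-running it for every algorithm iteration quickly becomes impractical without a way to reuse earlier data.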

I think the difference lies between function-based testing and scenario-based testing.

ADAS functions internally have a limited number of if-else branches, plus state machines at the system level. For function-based testing, covering all of this if-else logic and every state-machine transition, together with additional safety/failure tests, lets the ADAS system almost guarantee a safe and useful product.
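Function-based coverage of state-machine jumps can be generated mechanically. The sketch below assumes a hypothetical ACC state machine (states and events are invented for illustration); it finds, by breadth-first search, the shortest event sequence reaching each transition’s source state and emits one test case per transition, so every jump is exercised at least once.

```python
from collections import deque
from typing import Dict, List, Tuple

Transitions = Dict[Tuple[str, str], str]

# Hypothetical ACC state machine: (state, event) -> next state.
ACC_TRANSITIONS: Transitions = {
    ("OFF", "switch_on"): "STANDBY",
    ("STANDBY", "switch_off"): "OFF",
    ("STANDBY", "set_speed"): "ACTIVE",
    ("ACTIVE", "brake_pedal"): "STANDBY",
    ("ACTIVE", "accel_pedal"): "OVERRIDE",
    ("OVERRIDE", "accel_release"): "ACTIVE",
    ("ACTIVE", "sensor_fault"): "FAULT",
    ("FAULT", "fault_cleared"): "STANDBY",
}


def shortest_paths(transitions: Transitions, start: str) -> Dict[str, List[str]]:
    """Breadth-first search: shortest event sequence from start to each reachable state."""
    paths: Dict[str, List[str]] = {start: []}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for (src, event), dst in transitions.items():
            if src == state and dst not in paths:
                paths[dst] = paths[state] + [event]
                queue.append(dst)
    return paths


def transition_cover(transitions: Transitions, start: str) -> List[Tuple[List[str], str]]:
    """One test case per transition: drive to its source state, then fire its event.
    Returns (event_sequence, expected_final_state) pairs."""
    paths = shortest_paths(transitions, start)
    return [(paths[src] + [event], dst)
            for (src, event), dst in transitions.items() if src in paths]


def run(transitions: Transitions, start: str, events: List[str]) -> str:
    """Replay an event sequence; stands in for stimulating the real function."""
    state = start
    for event in events:
        state = transitions[(state, event)]
    return state


for events, expected in transition_cover(ACC_TRANSITIONS, "OFF"):
    assert run(ACC_TRANSITIONS, "OFF", events) == expected
    print(" -> ".join(events), "=>", expected)
```

Transitions whose source state is unreachable are silently skipped here; in a real suite they are worth reporting, since they usually point to a specification gap.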

There is no need to cover every scenario in the world for an L2 ADAS system. Of course, whenever someone does find new physical scenarios in the world, they can define new ADAS features and add them to their products.

In short, L2 ADAS is a closed-requirement system: the designers are not responsible for cases beyond the designed features.

For an L3 AD system, the designers are responsible for every possible scenario. It is an open-requirement system: there is no guarantee that the designed features are enough to cover all real-world scenarios, so it needs scenario-based testing and continuous evolution through OTA.

For L2 ADAS, OTA is in some ways optional, but L3 ADAS has to be updated over the air frequently.

OTA for L2 ADAS improves the performance of known features through analysis of big driving data, but it doesn’t discover novel features.

OTA for L3 ADAS needs to improve the performance of known features, and also to discover new features as much and as quickly as possible.

This of course places requirements on the OEM’s data platform. Tesla is currently the only OEM that can close the data loop: from customers’ driving data, to the OEM’s cloud, and OTA of new or better features back to customers.

There is no doubt that Tesla’s evolution is scenario-based. Collecting scenarios is not only for validating existing systems or for testing, but more for discovering new features, e.g. going from lane/pedestrian/vehicle detection to traffic-light detection, etc.

Traditional OEMs still don’t know how to use scenario data valuably; they only imagine that collected data can validate known features or calibrate them for better performance.

ADAS HiL

HiL helps validate embedded software on ECUs using simulation and modeling techniques. At the bottom of the V-model are software development and testing; the upper right side is software/hardware integration testing, namely HiL.

As I understand it, the purpose of HiL is software and hardware integration testing, with two main sub-validations: safety requirements and functional requirements.

To validate safety in HiL, fault injection is used. The safety module is the bottom line, and every upper functional module is rooted in it. In a narrow view, fault injection focuses only on known or system-defined faults, which is a finite set of cases; in a wide view, any upper functional case can lead to a fault-injection test case, which is almost unlimited.

In the narrow view, coverage of functions (both each function’s internal state machine and the trigger mechanisms for jumps across functions) is enumerable, even though the total may run to hundreds of thousands of cases.

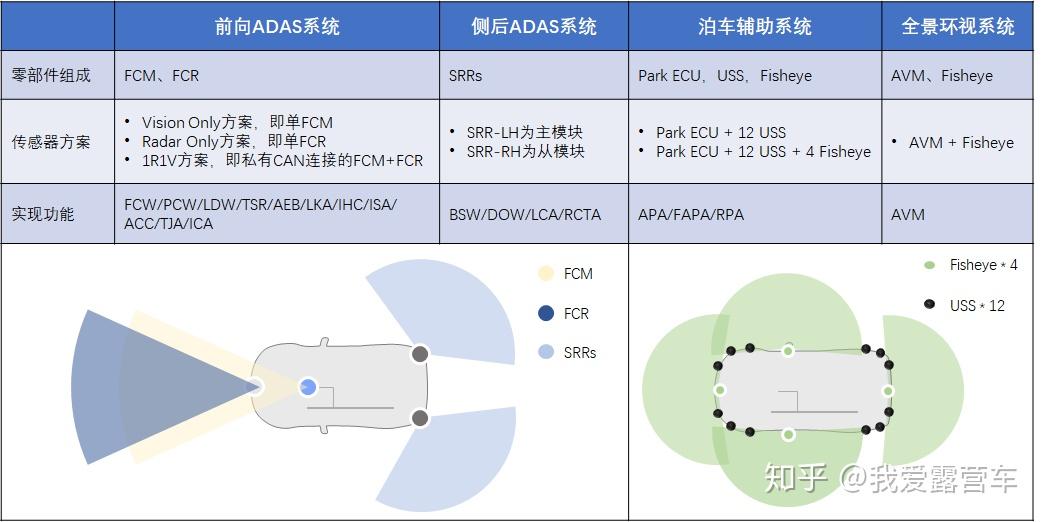

ADAS mass-production solutions

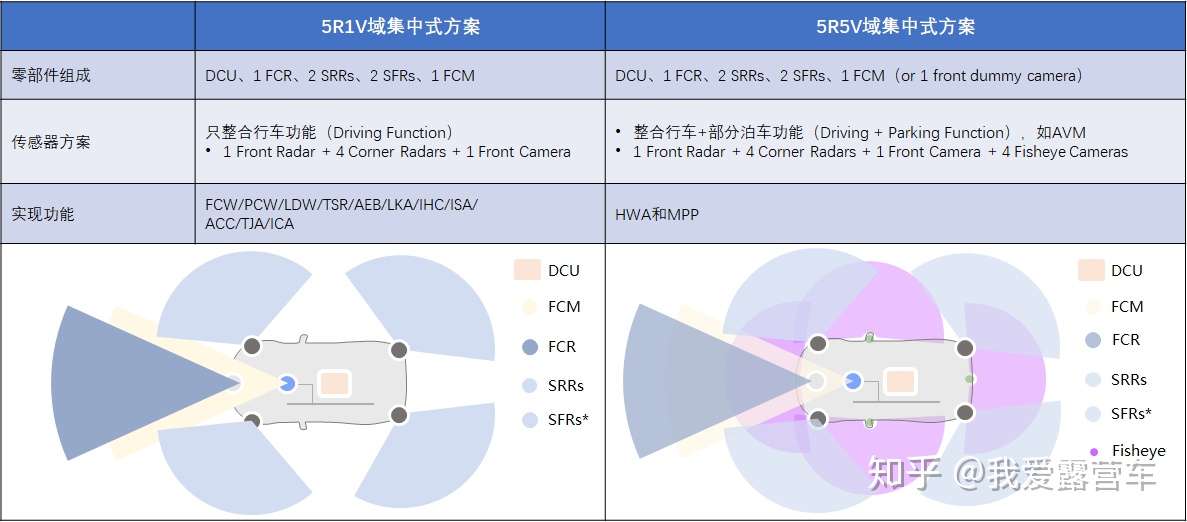

1R1V(3R1V)

Level 2 ADAS system (R = radar, V = vision camera), which some OEMs call Highway Assist (HWA), mostly provided by Tier 1s, e.g. Bosch.

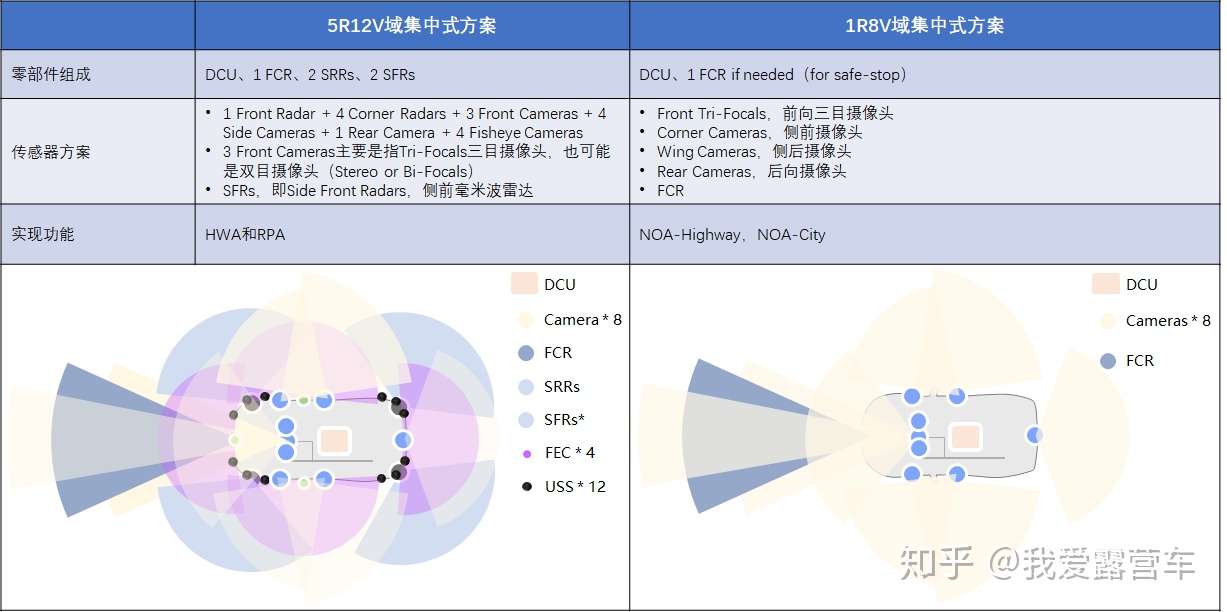

5R1V(5R5V)

Level 2+ ADAS system, which some OEMs call Highway Pilot (HWP), implying L3, although in fact it is not fully L3.

8R1V(5R12V)

Camera-first solution, used by Tesla and Mobileye.

L2 ADAS system validation

Traditional vehicle system test and validation deals with finite cases, e.g. ESP interfaces. ADAS system validation is scenario-based, and there are effectively infinite cases.

There are different ways to cover ADAS system validation:

- fault injection test: the vehicle powertrain, ADAS sensors, and ADAS SoC/MCU hardware all have a random probability of failure.

Fault Injection

Fault injection tests are used to increase the test coverage of safety requirements. In fault injection tests, arbitrary errors are introduced into the system under test to prove the safety mechanisms that have been encoded in its software. Fault injection testing is only a subset of requirements-based testing.

ISO 26262-4 [System] describes the Fault Injection Test as follows:

The test uses special means to introduce faults into the item. This can be done within the item via a special test interface or specially prepared elements or communication devices. The method is often used to improve the test coverage of the safety requirements, because during normal operation safety mechanisms are not invoked.

ISO 26262-4 [System] also defines the fault injection test as follows: Fault Injection Test includes injection of arbitrary faults in order to test safety mechanisms (e.g. corrupting software or hardware components, corrupting values of variables, by introducing code mutations, or by corrupting values of CPU registers). In practice, these “arbitrary” faults come from a known list of errors.

Fault injection is good at testing known sorts of bugs or defects, but poor at testing novel faults or defects, which are precisely the sorts of defects we would want to discover. Therefore, fault injection in ADAS is used to verify the designed safety mechanisms and responses.

Verification of the system: if an ADAS system is designed to tolerate a certain class of faults, these faults can be injected directly into the system to examine its responses. For errors that occur too infrequently to test effectively in the field, fault injection is a powerful way to accelerate their occurrence.

In summary, fault injection is more a verification tool than a tool for improving performance or finding novel design faults.
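A minimal sketch of the idea, assuming a hypothetical plausibility monitor on a radar range signal as the safety mechanism under test and a small, known fault list. Forcing each fault at a chosen cycle is what “accelerating the occurrence” means here; the check is whether the monitor reaches its safe state within a fault-tolerant time interval (`ftti_cycles`, an assumed value).

```python
from typing import Callable, Dict, List, Optional

# Known fault list (hypothetical). Each maps (clean_value, last_sent_value) -> faulty value.
FAULTS: Dict[str, Callable[[float, float], float]] = {
    "out_of_range": lambda value, last: -1.0,   # a negative range is physically impossible
    "stuck_at": lambda value, last: last,       # the signal freezes at its last value
    "spike": lambda value, last: value + 30.0,  # implausible jump between two cycles
}


class RangePlausibilityMonitor:
    """Hypothetical safety mechanism guarding a radar range signal [m]."""

    def __init__(self, max_range: float = 250.0, max_step: float = 20.0,
                 stuck_cycles: int = 3):
        self.max_range, self.max_step, self.stuck_cycles = max_range, max_step, stuck_cycles
        self.last: Optional[float] = None
        self.stuck = 0
        self.safe_state = False

    def step(self, value: float) -> bool:
        if not 0.0 <= value <= self.max_range:
            self.safe_state = True                      # out of physical range
        elif self.last is not None and abs(value - self.last) > self.max_step:
            self.safe_state = True                      # implausible jump
        elif self.last is not None and value == self.last:
            self.stuck += 1
            if self.stuck >= self.stuck_cycles:
                self.safe_state = True                  # frozen signal
        else:
            self.stuck = 0
        self.last = value
        return self.safe_state


def inject_and_check(signal: List[float], fault: str, start: int,
                     ftti_cycles: int) -> Optional[int]:
    """Inject `fault` from cycle `start` on. Return the detection latency in
    cycles, or None if detection came before injection (false alarm), after
    the fault-tolerant time interval, or never."""
    monitor = RangePlausibilityMonitor()
    last = signal[0]
    for k, value in enumerate(signal):
        sample = FAULTS[fault](value, last) if k >= start else value
        if monitor.step(sample):
            latency = k - start
            return latency if 0 <= latency <= ftti_cycles else None
        last = sample
    return None


# Clean signal: a lead vehicle slowly closing in; every fault is forced at cycle 10.
signal = [50.0 - 0.5 * k for k in range(40)]
for name in FAULTS:
    print(name, inject_and_check(signal, name, start=10, ftti_cycles=3))
```

Each fault in the list maps to one expected response, which is exactly the “verify the designed safety mechanism” use; a fault that is not on the list is never exercised.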

software verification and validation

Verification is about whether the functions exist as required; validation is about how well the functions perform as required. Verification techniques include dynamic testing and static testing, e.g. functional testing and random testing; validation techniques include (HW/SW) fault injection, dependency analysis, hazard analysis, risk analysis, etc.

Software verification is usually a combination of reviews, analyses, and tests. Reviews and analyses are performed on the following components:

- requirement analysis

- software architecture

- source code

- outputs of integration process

- test cases and their procedures and results

Testing demonstrates that the software satisfies all requirements, and that errors which could lead to unacceptable failure conditions are handled by safe strategies. It usually includes:

- HW/SW integration test: to verify that the software operates correctly on the hardware

- software integration test

- low-level test

CANape

measurement

devices connected to CANape under a specific channel group

the signals that need to be recorded

trigger strategy to start recording

calibration

visualization
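For the trigger strategy, a common pattern (sketched generically here, not CANape’s own configuration or API) is a rolling pre-trigger buffer plus a fixed post-trigger window, so a clip contains the lead-up to the event as well as its aftermath. The trigger condition and window lengths below are hypothetical.

```python
from collections import deque
from typing import Callable, Deque, Iterable, List, Optional, Tuple

Sample = Tuple[float, dict]  # (timestamp [s], {signal_name: value})


def triggered_recording(samples: Iterable[Sample], trigger: Callable[[dict], bool],
                        pre_s: float, post_s: float) -> List[List[Sample]]:
    """Keep a rolling pre-trigger buffer; when `trigger` fires, save that buffer
    plus every sample up to post_s seconds after the trigger instant."""
    pre: Deque[Sample] = deque()
    clips: List[List[Sample]] = []
    current: Optional[List[Sample]] = None
    stop_at = 0.0
    for t, values in samples:
        if current is not None:                 # post-trigger phase
            current.append((t, values))
            if t >= stop_at:
                clips.append(current)
                current = None
            continue
        pre.append((t, values))                 # pre-trigger ring buffer
        while t - pre[0][0] > pre_s:
            pre.popleft()
        if trigger(values):
            current, stop_at = list(pre), t + post_s
            pre.clear()
    if current is not None:                     # stream ended mid-clip
        clips.append(current)
    return clips


# Hypothetical trigger: hard braking above 5 m/s^2, sampled at 1 Hz for brevity.
stream = [(float(t), {"decel": 6.0 if t == 10 else 0.0}) for t in range(20)]
clips = triggered_recording(stream, lambda v: v["decel"] > 5.0, pre_s=2.0, post_s=3.0)
print([t for t, _ in clips[0]])  # [8.0, 9.0, 10.0, 11.0, 12.0, 13.0]
```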

CANoe

refer

ADAS/AD dev: L2+ ADAS/AD sensor arch

ADAS/AD dev: SoC chips solution

ADAS/AD dev: massive production solutions

using Fault Insertion Units for electronic testing

Fault injection test in ISO 26262 – Do you really need it

paper: Fault Injection in automotive standard ISO 26262: an initial approach

verification/validation/certification from CMU EE

integrated ADAS HiL system with the combination of CarMaker and various ADAS test benches

ADAS data logging solution know-how

Data replay offers an excellent way to analyze driving scenarios and verify simulations based on real-world data. This can be done with HIL systems as well as with data logging systems that offer a playback mechanism and software to control the synchronized playback. Captured data can be replayed in real time or at a slower rate to manipulate and/or monitor the streamed data.
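A minimal sketch of timed playback at real time or a slower rate. The `publish` callback is a hypothetical stand-in for whatever bus or HIL interface receives the frames; the clock and sleep functions are injectable so the example can run on a simulated clock.

```python
import time
from typing import Callable, Iterable, Optional, Tuple

Frame = Tuple[float, bytes]  # (capture timestamp [s], raw payload)


def replay(frames: Iterable[Frame], publish: Callable[[float, bytes], None],
           rate: float = 1.0,
           clock: Callable[[], float] = time.monotonic,
           sleep: Callable[[float], None] = time.sleep) -> None:
    """Re-publish recorded frames with their original relative timing.
    rate=1.0 is real time, rate=0.5 plays back at half speed."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    start_wall = clock()
    first_ts: Optional[float] = None
    for ts, payload in frames:
        if first_ts is None:
            first_ts = ts
        # Schedule against the start of playback (not the previous frame),
        # so sleep jitter does not accumulate over a long recording.
        due = start_wall + (ts - first_ts) / rate
        delay = due - clock()
        if delay > 0:
            sleep(delay)
        publish(ts, payload)


# Usage with a simulated clock so the example runs instantly.
class SimClock:
    def __init__(self) -> None:
        self.now = 0.0

    def time(self) -> float:
        return self.now

    def sleep(self, seconds: float) -> None:
        self.now += seconds


sim = SimClock()
sent = []
recording = [(100.0, b"\x01"), (100.1, b"\x02"), (100.3, b"\x03")]
replay(recording, lambda ts, p: sent.append((round(sim.now, 6), p)),
       rate=0.5, clock=sim.time, sleep=sim.sleep)
print(sent)  # [(0.0, b'\x01'), (0.2, b'\x02'), (0.6, b'\x03')]
```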

An open, end-to-end simulation ecosystem can run scenarios through simulations via a closed-loop process.

overcoming logging challenges in ADAS dev

pdf: ADAS logging solution from Vector

confidently record raw ADAS sensor data for drive tests from NI

online and offline validation of ADAS ECUs

pdf: data recording for adas development from Vector

pdf: time sync in automotive Ethernet

pdf: QNX platform for ADAS 2.0

time sync in modular collaborative robots

pdf: solving storage conundrum in ADAS development and validation

validation of ADAS platforms(ECUs) with data replay test from dSPACE

data-driven road to automated driving

open loop HiL for testing image processing ECUs

github: open simulation interface

AUTOSAR know-how

The following notes are collected from the Internet; if they infringe any copyright, please contact me.

AUTOSAR: Microcontroller Abstraction Layer (MCAL)

Microcontroller drivers

GPT Driver

WDG Driver

MCU Driver

Core test

Memory drivers

Communication drivers

Ethernet driver

FlexRay driver

CAN driver

LIN driver

SPI driver

I/O drivers

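AUTOSAR: Basic Software Layer

The AUTOSAR software architecture consists of an Application Layer that is completely hardware-independent and a hardware-dependent Basic Software (BSW) layer, with a Run Time Environment (RTE) placed between them to decouple the two, forming a layered architecture.

Basic software layer components

System: provides standardized (OS, timer, error) specifications and library functions

Memory: standardizes access to internal and external memory

Communication: standardizes access to vehicle network systems and to inter-ECU and intra-ECU communication

I/O: standardizes I/O for sensors, actuators, and ECUs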

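Service layer and complex drivers

System services

For example, OS timer services and error management. They provide basic services to applications and basic software modules.

Memory services

Communication services

Communication services interact with the communication drivers through the communication hardware abstraction.

Complex drivers

The Complex Drivers (CDD) layer spans from the microcontroller hardware up to the RTE. Its main task is to integrate special-purpose, non-standard functional modules that cannot be configured through the MCAL. Complex drivers are tightly coupled to the microcontroller and ECU hardware.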

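AUTOSAR time sync

Time sync

For the Adaptive Platform, the following three different technologies were considered to meet all the necessary time synchronization requirements:

StbM of the Classic Platform

The chrono library: either std::chrono (C++11) or boost::chrono

The POSIX time interfaces

Time Base Resources (TBRs) act as time base brokers, providing access to a synchronized time base. In this way, the TS module abstracts from the “real” time base provider.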

AUTOSAR: time sync protocol specification

Precision Time Protocol (PTP)

generalized Precision Time Protocol (gPTP)

Time Synchronization over Ethernet, IEEE802.1AS

time master: an entity that is the master for a certain Time Base and propagates this Time Base to a set of Time Slaves within a certain segment of a communication network. If a Time Master is also the owner of the Time Base, it is the Global Time Master.

time slave: the recipient for a certain Time Base within a certain segment of a communication network, being a consumer for this Time Base

time measurement with Switches:

In a time-aware Ethernet network, two HW types of control unit exist:

Endpoints directly working on a local Ethernet-Controller

Time Gateways, time aware bridges, where the local Ethernet-Controller connects to an external switch device. A Switch device leads to additional delays, which have to be considered for the calculation of the corresponding Time Base

specification of time sync over Ethernet

Global Time Sync over Ethernet (EthTSyn) interfaces with:

Synchronized Time-Base Manager (StbM): gets and sets the current time value

Ethernet Interface (EthIf): receives and transmits messages

Basic Software Mode Manager (BswM): coordinates network access

Default Error Tracer (DET): reports errors

A time gateway typically consists of one Time Slave and one or more Time Masters. When mapping time entities to real ECUs, an ECU could be Time Master (or even Global Time Master) for one Time Base and Time Slave for another Time Base.

time sync in automotive Ethernet

The principal methods for time synchronization in the automotive industry are currently based on AUTOSAR 4.2.2, IEEE 802.1AS, and the revised IEEE 802.1AS-rev, which was developed by the Audio/Video Bridging Task Group, now known as the TSN (Time Sensitive Networking) Task Group.

The type of network determines the details of the synchronization process. For example, with CAN and Ethernet, the Time Slave corrects the received global time base by comparing the time stamp from the transmitter side with its own receive time stamp. With FlexRay, the synchronization is simpler because FlexRay is a deterministic system with fixed cycle times that acts in a strictly predefined time pattern. The time is thus implicitly provided by the FlexRay clock. While the time stamp is always calculated by software in CAN, Ethernet allows it to be calculated by either software or hardware.
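To make the time-stamp comparison concrete, here is a sketch of gPTP-style arithmetic for Ethernet, simplified by assuming both clocks tick at the same rate (the neighbour rate ratio of IEEE 802.1AS is ignored): the peer-delay exchange gives the link delay, and the Sync time stamp plus correction and link delay gives the slave’s offset. Time stamps are integers in nanoseconds; the values are made up.

```python
from dataclasses import dataclass


@dataclass
class PdelayTimestamps:
    t1: int  # Pdelay_Req sent, initiator (slave) clock [ns]
    t2: int  # Pdelay_Req received, responder clock [ns]
    t3: int  # Pdelay_Resp sent, responder clock [ns]
    t4: int  # Pdelay_Resp received, initiator (slave) clock [ns]


def mean_link_delay(ts: PdelayTimestamps) -> float:
    """Round trip minus the responder's turnaround, halved. The clock offset
    between the two ends cancels out; the neighbour rate ratio is ignored."""
    return ((ts.t4 - ts.t1) - (ts.t3 - ts.t2)) / 2


def slave_offset(origin_ts: int, correction: int, link_delay: float,
                 local_rx_ts: int) -> float:
    """Slave clock minus master time at the instant a Sync message was received.
    origin_ts: master's transmit time stamp; correction: delay accumulated on
    the way, e.g. residence time in switches (time-aware bridges)."""
    master_time_at_rx = origin_ts + correction + link_delay
    return local_rx_ts - master_time_at_rx


# Example: 500 ns cable delay, slave clock running 1000 ns ahead of the master.
pd = PdelayTimestamps(t1=10_000, t2=9_500, t3=11_500, t4=13_000)
delay = mean_link_delay(pd)
offset = slave_offset(origin_ts=20_000, correction=0,
                      link_delay=delay, local_rx_ts=21_500)
print(delay, offset)  # 500.0 1000.0
```

The slave then subtracts `offset` from its local time; the `correction` term is where the extra switch delays mentioned for time gateways enter the calculation.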

MCU know-how

basic knowledge

SPI protocol

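SPI (Serial Peripheral Interface) lets the MCU communicate serially with various peripheral devices to exchange information.

The SPI bus can connect directly to many standard peripheral devices from various manufacturers, including Flash/RAM, network controllers, LCD display drivers, A/D converters, and MCUs.

SPI interfaces are mainly used between the MCU and devices such as EEPROM, Flash, real-time clocks, A/D converters (ADCs), and LCDs, as well as between digital signal processors and digital signal decoders, where a relatively high communication rate is required.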
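SPI is full duplex: the master and the slave each hold a shift register, and on every clock one bit moves out on MOSI while another moves in on MISO. The toy simulation below (MSB first, clock polarity and phase abstracted away) shows that after eight clocks the two registers have simply swapped contents.

```python
from typing import Tuple


def spi_transfer(master_out: int, slave_out: int, bits: int = 8) -> Tuple[int, int]:
    """Simulate one full-duplex SPI transfer, MSB first.
    Returns (byte received by the master, byte received by the slave)."""
    master_rx = slave_rx = 0
    for i in range(bits - 1, -1, -1):
        mosi = (master_out >> i) & 1          # bit driven by the master
        miso = (slave_out >> i) & 1           # bit driven by the slave
        master_rx = (master_rx << 1) | miso   # master samples MISO
        slave_rx = (slave_rx << 1) | mosi     # slave samples MOSI
    return master_rx, slave_rx


print([hex(b) for b in spi_transfer(0xA5, 0x3C)])  # ['0x3c', '0xa5']
```

This is also why reading from an SPI device always means clocking out some byte (often a dummy), since the master only receives while it transmits.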

TAPI protocol

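TAPI (Telephony Application Programming Interface) is a standard programming interface that lets users talk, from their computer, by telephone or video phone with the person on the other end. TAPI also has a Service Provider Interface (SPI) aimed at hardware vendors who write drivers; this SPI is unrelated to the Serial Peripheral Interface above. The TAPI dynamic-link library maps the API onto the SPI and controls input and output traffic.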

MCU timing

Software design is easy when the processor can provide far more computing time than the application needs. On an MCU, the reality is often the opposite. The following is some basic know-how before we jump into ADAS MCU/SoC issues.

scheduling sporadic and aperiodic events in a hard real-time system

A common use of periodic tasks is to process sensor data and update the current state of the real-time system on a regular basis.

Aperiodic tasks are used to handle the processing requirements of random events such as operator requests. An aperiodic task typically has a soft deadline. Aperiodic tasks that have hard deadlines are called sporadic tasks.

Background servicing of aperiodic requests occurs whenever the processor is idle (i.e., not executing any periodic tasks and no periodic tasks are pending). If the load of the periodic task set is high, then the utilization left for background service is low, and background service opportunities are relatively infrequent. Polling instead creates a periodic task for servicing aperiodic requests: at regular intervals, the polling task is started and services any pending aperiodic requests. However, if no aperiodic requests are pending, the polling task suspends itself until its next period, and the time originally allocated for aperiodic service is not preserved for aperiodic execution but is instead used by periodic tasks.

foreground-background scheduling

periodic tasks are considered as foreground tasks

sporadic and aperiodic tasks are considered as background tasks

Foreground tasks have the highest priority and background (bg) tasks the lowest. Among the foreground tasks, the one with the highest priority is scheduled first, and at every scheduling point the highest-priority ready task is scheduled for execution. Bg tasks are scheduled only when no foreground task is ready.

completion time for foreground task

For fg tasks, the completion time is the same as the absolute deadline.

completion time for background task

While any fg task is executing, bg tasks wait.

Let task T_i be a fg task and E_i the processing time it requires in every period P_i. Hence its average CPU utilization is:

$$U_i = \frac{E_i}{P_i}$$

If there are n periodic (fg) tasks, T_1, T_2, …, T_n,

then the total average CPU utilization of the fg tasks is:

$$U = \sum_{i=1}^{n} \frac{E_i}{P_i}$$

then the average time available for executing bg tasks in every unit of time is:

$$1 - \sum_{i=1}^{n} \frac{E_i}{P_i}$$

Let T_B be a bg task and E_B its required processing time; then the completion time of the bg task is:

$$C_B = \frac{E_B}{1 - \sum_{i=1}^{n} E_i / P_i}$$
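The formulas above translate directly into a small helper. The task set in the usage line is hypothetical, and the result is an average: the actual completion time of a particular background job also depends on how foreground releases line up with it.

```python
from typing import Sequence, Tuple


def bg_completion_time(e_b: float, fg_tasks: Sequence[Tuple[float, float]]) -> float:
    """Average completion time C_B = E_B / (1 - sum(E_i / P_i)) of a background
    task needing e_b units of CPU, when foreground tasks (E_i, P_i) always preempt it."""
    utilization = sum(e / p for e, p in fg_tasks)
    if utilization >= 1.0:
        raise ValueError("foreground utilization >= 1: the bg task never completes")
    return e_b / (1.0 - utilization)


# Two fg tasks use 1/4 + 2/8 = 50 % of the CPU; a bg job needing 3 ms then
# takes about 6 ms on average.
print(bg_completion_time(3.0, [(1.0, 4.0), (2.0, 8.0)]))  # 6.0
```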

In an ADAS system, there are usually both periodic (fg) tasks and aperiodic (bg) tasks, and they are correlated. Fg tasks always have higher priority than bg tasks, so a fg task may rely on the output of a bg task that has not been updated, or only partially updated, because of the execution priority, which can cause issues.

refer

Vector: Functional safety and ECU implementation

verification and validation in ADAS dev

Verification and Validation in V-model

verification

- whether the software conforms to the specification

- finds bugs in dev

- its output is the software architecture, etc.

- the QA team does verification and makes sure the software matches the requirements in the SRS

- it comes before validation

validation

- it’s about testing, e.g. black-box testing, white-box testing, non-functional testing

- it can find bugs that were not caught by verification

- output is an actual product

The V-model is an extension of the waterfall model. The testing phases are categorized as the “Validation Phase” and the development phases as the “Verification Phase”.

how simulation helps

- for validation, to cover known and rare critical scenarios

- for verification to test whether the component function as expected

model based to data driven

| methodology | completeness | all known | zero-error guarantee | iterable | example |
|---|---|---|---|---|---|
| model based | 1 | 1 | 1 | 0 | NASA |
| data driven | 0 | 0 | 0 | 1 | Tesla Motors |

In traditional vehicle/aerospace development, the system team first clearly defines all requirements from different stakeholders and departments, which may take a long time, and then splits them into use cases, which are guaranteed to form a complete, fully known set with a zero-error guarantee; the engineers just make sure each new development passes its specific verification. The general product design cycle is 3 to 5 years, and once a product is released to market, there is little chance to upgrade it except back at the dealer shop.

The model-based mindset needs to control everything at the very beginning, building a clear but validatable, highly complex system up front, even if it takes a very long time. The development stage can then be a minor engineering process run by a mature, project-driven management team.

The benefit of the model-based mindset is that it strongly guarantees that known requirements (including safety) are satisfied; the drawback is that it loses flexibility and responds slowly to new requirements, which ultimately means losing the market.

To validate a model-based ADS design, the emphasis is usually on full coverage, or completeness, of the test cases, which can usually be pre-defined in a semantic format (.xml) during the system requirements stage. A validation system (e.g. a simulator) then parses the semantic test cases and produces, runs, and analyzes the test scenarios automatically.

In a data-driven ADS system, system verification and validation depend strongly on valid data.
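A minimal sketch of that parse, run, analyze loop. The XML layout, requirement IDs, and the constant-deceleration “simulator” are all hypothetical stand-ins (this is not OpenSCENARIO, and a real validation system would drive a full simulator), but the structure is the same: each semantic case traces to a requirement, is executed, and is scored against its expected result.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical semantic test-case format (not OpenSCENARIO).
CASES_XML = """
<testcases>
  <testcase id="AEB-001" requirement="SR-12">
    <ego speed="13.9"/>
    <target type="stationary_vehicle" distance="40"/>
    <expect result="no_collision"/>
  </testcase>
  <testcase id="AEB-002" requirement="SR-12">
    <ego speed="27.8"/>
    <target type="stationary_vehicle" distance="30"/>
    <expect result="no_collision"/>
  </testcase>
</testcases>
"""


@dataclass
class SemanticCase:
    case_id: str
    requirement: str
    ego_speed: float        # [m/s]
    target_distance: float  # [m]
    expected: str


def parse_cases(xml_text: str) -> List[SemanticCase]:
    root = ET.fromstring(xml_text.strip())
    return [SemanticCase(case_id=tc.get("id"),
                         requirement=tc.get("requirement"),
                         ego_speed=float(tc.find("ego").get("speed")),
                         target_distance=float(tc.find("target").get("distance")),
                         expected=tc.find("expect").get("result"))
            for tc in root.iter("testcase")]


def simulate(case: SemanticCase, reaction_s: float = 0.5, decel: float = 8.0) -> str:
    """Toy stand-in for the simulator: react, then brake at constant deceleration."""
    stopping = case.ego_speed * reaction_s + case.ego_speed ** 2 / (2.0 * decel)
    return "no_collision" if stopping < case.target_distance else "collision"


def run_suite(xml_text: str) -> Dict[str, Tuple[str, bool]]:
    """Map each case id to (traced requirement, passed?)."""
    return {c.case_id: (c.requirement, simulate(c) == c.expected)
            for c in parse_cases(xml_text)}


print(run_suite(CASES_XML))  # {'AEB-001': ('SR-12', True), 'AEB-002': ('SR-12', False)}
```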

1) Data quantity and quality. If the data set covers many different kinds of scenarios, it is high quality; if it has millions of frames of single-lane highway driving, it doesn’t really teach the (sub)system much.

2) Failure-case data, which is the most valuable during development. Usually the road-test team records all the data from sensor input to control output, as well as many intermediate outputs. A failure case means the (sub)system’s (real) output does not correspond to the expected (ideal) output, which is a clue that something is wrong or mishandled in the current version of the ADS system. Of course, there is also a large amount of normal-case data.

3) Ground truth (GT) data. Telling whether a case is normal or a failure needs an evaluation standard, namely GT data. There are different ways to get GT data in the R&D stage and in the mass-production stage. In the R&D stage, we can build a GT data-collection vehicle, which is expensive but highly valuable; of course it’s better to generate GT data automatically, but a manual check is almost mandatory. A low-efficiency but common way to get GT data is the test driver’s eye: after data collection, there is usually offline data processing using the event log kept by the driver, so the offline processing can label scenarios as normal or failure cases; usually we care more about failure cases. In the mass-production stage, there is no GT data-collection hardware, but there is a massive data source from which to build a very high-confidence classifier of failure versus normal cases.

4) Sub-system verification is another common use of data, e.g. for the fusion module, AEB module, etc. Due to the limitations of existing sensor models and the realism of SiL environments, physical sensor raw data is more valuable for verifying a subsystem: it includes more practical sensor parameters, physical performance, physical vehicle limitations, etc., compared to synthetic sensor data from a simulator, which is either ideal, only statistically equivalent, or too costly to bring to physical-level fidelity.

5) AI model training, which consumes huge amounts of data. In the R&D stage, it is difficult to get that much road data, so synthetic data is used a lot, but it has to be mixed with a portion of physical road data to guarantee no overfitting to the synthetic data. On the other hand, it’s a totally different story if someone can obtain data from a mass-production fleet, as in Tesla’s patent “SYSTEM and METHOD for obtaining training data”: An example method includes receiving sensor data and applying a neural network to the sensor data. A trigger classifier is applied to an intermediate result of the neural network to determine a classifier score for the sensor data. Based at least in part on the classifier score, a determination is made whether to transmit via a computer network at least a portion of the sensor data. Upon a positive determination, the sensor data is transmitted and used to generate training data.

This is really an AI topic: learning from massive sensor data to understand failure cases.

6) AI model validation. Validation should depend on a labeled, valid dataset, e.g. GT data, or data verified by an existing system; e.g. some teams trust Mobileye’s output as GT data.

7) (sub)system validation
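Items 2) and 3) boil down to comparing system output with GT and flagging where they disagree. The sketch below assumes a scalar per-frame signal (for example, the measured distance to the lead vehicle), a hypothetical tolerance, and a minimum event length to suppress single-frame noise; it returns frame spans as candidate failure cases for offline review.

```python
from typing import List, Optional, Sequence, Tuple


def failure_events(output: Sequence[float], ground_truth: Sequence[float],
                   tolerance: float, min_frames: int = 3) -> List[Tuple[int, int]]:
    """Return inclusive (first_frame, last_frame) spans in which
    |output - ground_truth| exceeds tolerance for at least min_frames frames in a row."""
    n = min(len(output), len(ground_truth))
    events: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for k in range(n):
        bad = abs(output[k] - ground_truth[k]) > tolerance
        if bad and start is None:
            start = k                                   # a deviation begins
        elif not bad and start is not None:
            if k - start >= min_frames:
                events.append((start, k - 1))           # long enough to keep
            start = None
    if start is not None and n - start >= min_frames:   # deviation runs to the end
        events.append((start, n - 1))
    return events


# Distance to the lead vehicle [m]: GT from a reference sensor vs. system output.
gt = [50.0] * 10
out = [50.2, 50.1, 47.0, 46.5, 46.0, 45.9, 50.0, 44.0, 50.1, 50.0]
print(failure_events(out, gt, tolerance=1.0))  # [(2, 5)]
```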

semantic-driven SiL

This mostly corresponds to model-based development. There are a few issues with semantic-driven SiL:

1) Building a near-realistic sensor model: how realistic is it compared to the real physical sensor? 80%?

2) The virtual environment from the SiL simulator, which depends on its virtual modeling ability.

3) 1) + 2) → synthetic sensor raw data, which may be about 60% to 80% realistic compared to road-test recordings.

4) Is there a systematic bias in the virtual/synthetic world?

During R&D road tests, we can record a failure scenario as semantic metadata (e.g. an OpenX-style or Python script), as well as the full sensor & CAN data.

With the semantic metadata, we import the scenario into the SiL simulator, which creates a virtual world for it. If the sensor configuration (both intrinsic and extrinsic) in the simulator is the same as in the real test vehicle, and our simulated sensor model behaves statistically equivalently to the real sensor (checked against the sensor’s statistical parameters), it is almost a statistically realistic sensor model.

The semantic scenario description framework (SSDF) is a common way to generate, manage, and use verification (functional and logical) scenario libraries and validation (concrete) scenario libraries. The benefit of SSDF is that it is a natural extension of the V-model: after the system requirements are defined, test cases are generated from each use case, namely the semantic scenarios.

But as mentioned above, how precise is SiL performance, especially when it comes to a statistically equivalent sensor model? That is, how do we validate the accuracy loss, or even the reliability loss, between synthetic and real environments? This is usually not considered well in semantic-scenario-based SiL.

No doubt synthetic data, or purely semantic scenarios, have their own benefit, namely fully labeled data, which can be used as ground truth in the virtual world or as input for AI training. Again, we need to confirm how reliable these labels are before we can trust them 100%.
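In an SSDF-style flow, a logical scenario fixes the parameter ranges and each concrete scenario picks one value per parameter. The sketch below expands a hypothetical highway cut-in logical scenario into its full grid of concrete scenarios; parameter names and values are invented for illustration.

```python
import itertools
from typing import Dict, List, Sequence

# Hypothetical logical scenario "highway cut-in": parameter -> candidate values.
LOGICAL_CUT_IN: Dict[str, Sequence[float]] = {
    "ego_speed_mps": [22.0, 27.0, 33.0],
    "cut_in_gap_m": [10.0, 20.0, 30.0],
    "target_speed_delta_mps": [-5.0, 0.0],
}


def concrete_scenarios(logical: Dict[str, Sequence[float]]) -> List[Dict[str, float]]:
    """Expand a logical scenario into every combination of its parameter values."""
    names = list(logical)
    return [dict(zip(names, combo))
            for combo in itertools.product(*(logical[name] for name in names))]


scenarios = concrete_scenarios(LOGICAL_CUT_IN)
print(len(scenarios))  # 18
print(scenarios[0])    # {'ego_speed_mps': 22.0, 'cut_in_gap_m': 10.0, 'target_speed_delta_mps': -5.0}
```

A full grid grows multiplicatively with every added parameter, which is why random or search-based sampling of the parameter space is a common alternative to exhaustive expansion.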

Ideal ground truth/probabilistic sensor models are typically validated via software-in-the-loop (SIL) simulations.

Phenomenological/physical sensor models are physics-based. They are based on the sensor’s measurement principles (e.g. camera image capture, radar wave propagation) and play a role in simulating phenomena such as haze, glare effects, or precipitation. They can generate raw data streams, 3-D point clouds, or target lists.

sensor statistical evaluation

Sensor accuracy model: roughly follows an exponential distribution with respect to distance in the x-y plane; further, sensor accuracy depends on speed, scenario, etc.

Sensor detection reliability (including false detections and missed detections): roughly follows a normal distribution; it can be further specified as obstacle detection reliability and lane detection reliability.

Sensor type-classification reliability: roughly a normal distribution.

Sensor object-tracking reliability: roughly a normal distribution.

Vendor nominal intrinsics: there is always a gap between the vendor’s nominal intrinsics and the sensor’s real performance, e.g. max/min detection distance, FOV, angle/distance resolution, etc. So we can use test-verified sensor parameters as input for the sensor model, rather than the vendor’s nominal parameters.

As mentioned, there are many other factors in getting a statistically equivalent sensor model, which can be considered iteration by iteration.

The idea above is a combination of statistical models; if there is a way to collect sensor data massively, a data-driven machine-learning model should be better than a combination of statistical models.
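Putting the statistical parameters above together, a minimal per-frame sensor model might look like the sketch below. All numbers are hypothetical placeholders for test-verified values; as simplifications, detection is a per-frame Bernoulli draw, only range (not bearing) is noisy, with a standard deviation that grows exponentially with distance, and at most one ghost target appears per frame.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SensorParams:
    # All values are hypothetical placeholders for test-verified parameters.
    max_range_m: float = 150.0
    half_fov_deg: float = 30.0
    p_detect: float = 0.95            # per-frame detection probability inside coverage
    sigma0_m: float = 0.1             # range-noise std close to the sensor
    sigma_growth_per_m: float = 0.02  # noise std grows as exp(growth * range)
    p_false_alarm: float = 0.05       # chance of one ghost target per frame


def simulate_detections(objects: List[Tuple[float, float]], p: SensorParams,
                        rng: random.Random) -> List[Tuple[float, float]]:
    """Turn ground-truth (x, y) positions in the sensor frame [m] into noisy
    detections: coverage limits, missed detections, range noise, ghosts."""
    detections = []
    for x, y in objects:
        r, bearing = math.hypot(x, y), math.atan2(y, x)
        if r > p.max_range_m or abs(math.degrees(bearing)) > p.half_fov_deg:
            continue                                   # outside verified coverage
        if rng.random() >= p.p_detect:
            continue                                   # missed detection
        sigma = p.sigma0_m * math.exp(p.sigma_growth_per_m * r)
        r_meas = r + rng.gauss(0.0, sigma)             # range error only, for brevity
        detections.append((r_meas * math.cos(bearing), r_meas * math.sin(bearing)))
    if rng.random() < p.p_false_alarm:                 # false detection (ghost)
        r_g = rng.uniform(0.0, p.max_range_m)
        b_g = math.radians(rng.uniform(-p.half_fov_deg, p.half_fov_deg))
        detections.append((r_g * math.cos(b_g), r_g * math.sin(b_g)))
    return detections


rng = random.Random(42)
truth = [(40.0, 2.0), (120.0, -10.0), (200.0, 0.0)]  # the last one is out of range
for frame in range(3):
    print(simulate_detections(truth, SensorParams(), rng))
```

Replacing each hand-picked distribution with one fitted from recorded drives is the natural next iteration, and eventually the data-driven model suggested below.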

data is the new oil

At the first stage, we saw many public online datasets, especially for perception AI training, from different companies, e.g. Cognata, Argo AI, Cruise, Uber, Baidu Apollo, Waymo, Velodyne, etc.

For a while now, many teams have worked out the roadmap for AI models/algorithms; the real gap between a great company and a demo team increasingly lies in building a massive data-collection pipeline, rather than simply verifying that the algorithm works. That is the difference between Tesla and a small AI team.

This will be a big jump from the traditional model-based mindset to a data-driven mindset, covering data-driven algorithms as well as data pipelines.

In China, data centers, cloud computing, and 5G are called “new infrastructure”, which will definitely accelerate building up data pipelines from massive fleets.

refer

what are false alerts on a radar detector

surfelGAN: synthesizing realistic sensor data for AD from DeepAI

data injection test of AD through realistic simulation

BMW: development of self driving cars